2025

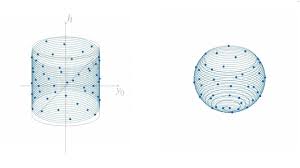

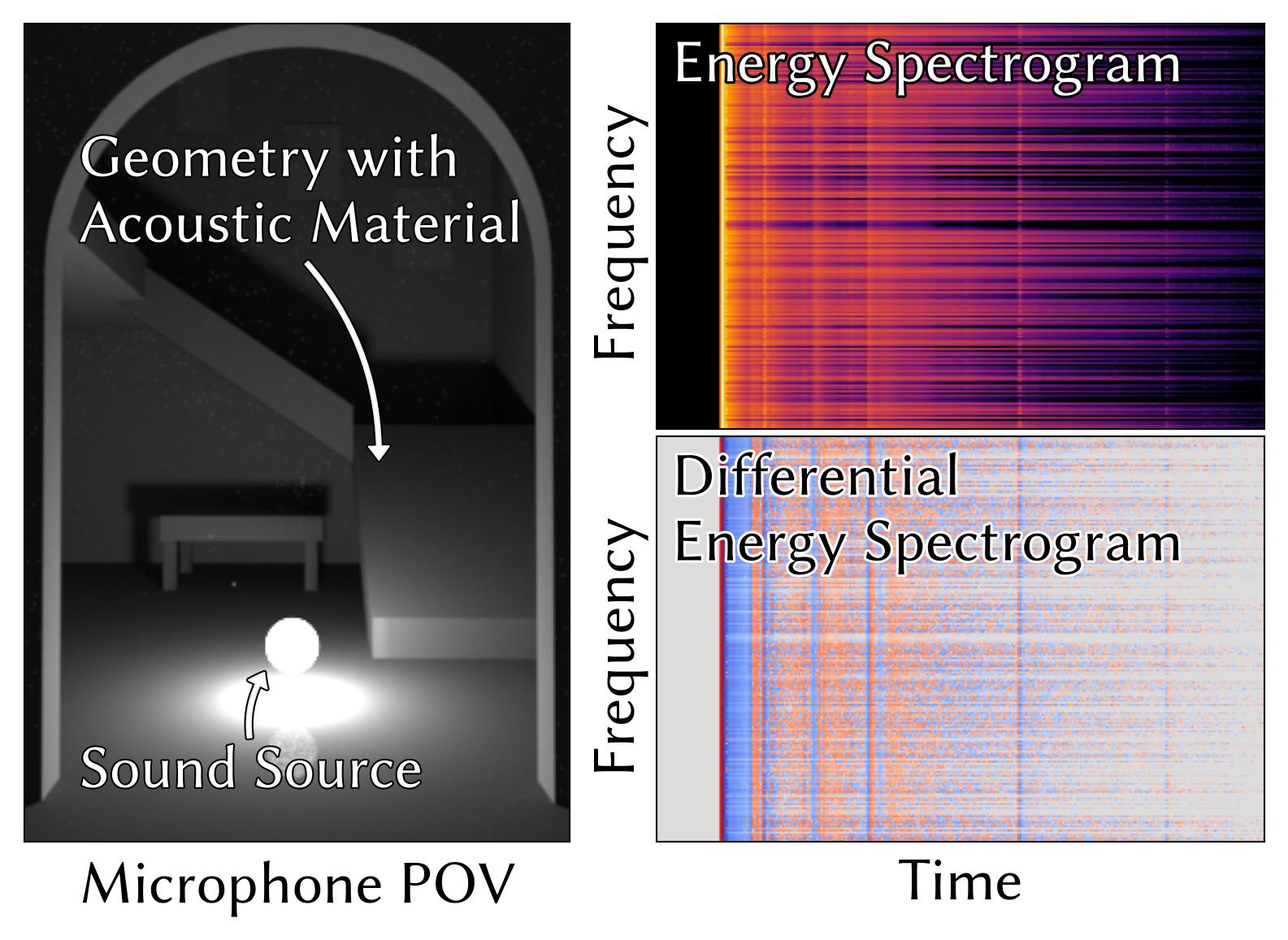

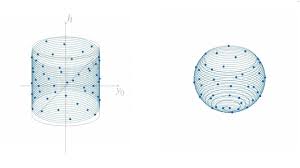

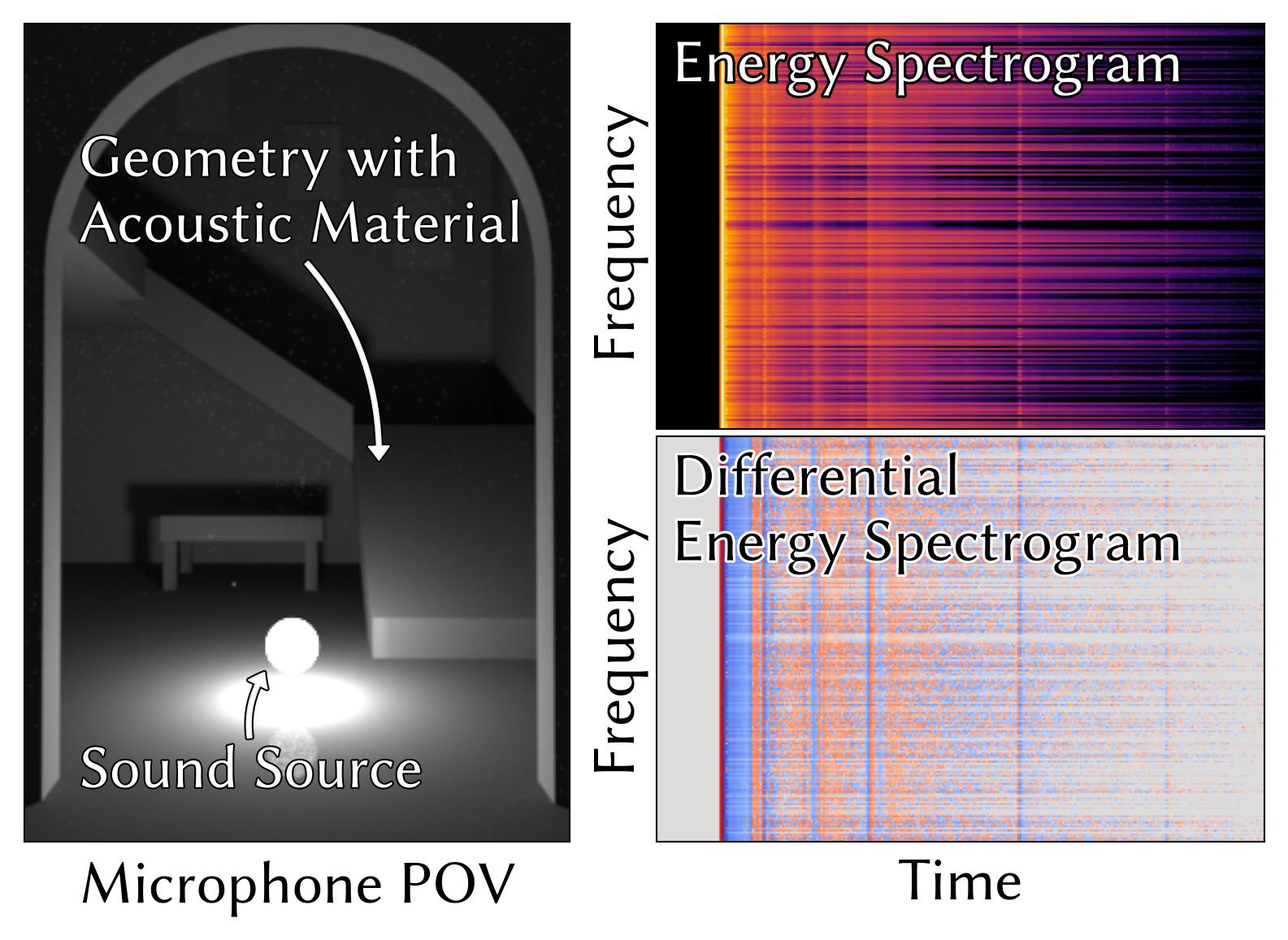

Differentiable Geometric Acoustic Path Tracing using Time-Resolved Path Replay Backpropagation (2025)

ACM Transactions on Graphics (Proc. of SIGGRAPH)

@article{Finnendahl_and_Worchel:2025:DiffAcousticPT,

author = {Finnendahl, Ugo and Worchel, Markus and Jüterbock, Tobias and Wujecki, Daniel and Brinkmann, Fabian and Weinzierl, Stefan and Alexa, Marc},

title = {Differentiable Geometric Acoustic Path Tracing using Time-Resolved Path Replay Backpropagation},

year = {2025},

issue_date = {August 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {44},

number = {4},

issn = {},

url = {https://doi.org/10.1145/3730900},

doi = {10.1145/3730900},

abstract = {},

journal = {ACM Trans. Graph.},

month = {aug},

articleno = {},

numpages = {},

keywords = {differentiable rendering, geometrical acoustics, physically-based simulation, acoustic optimization}

}

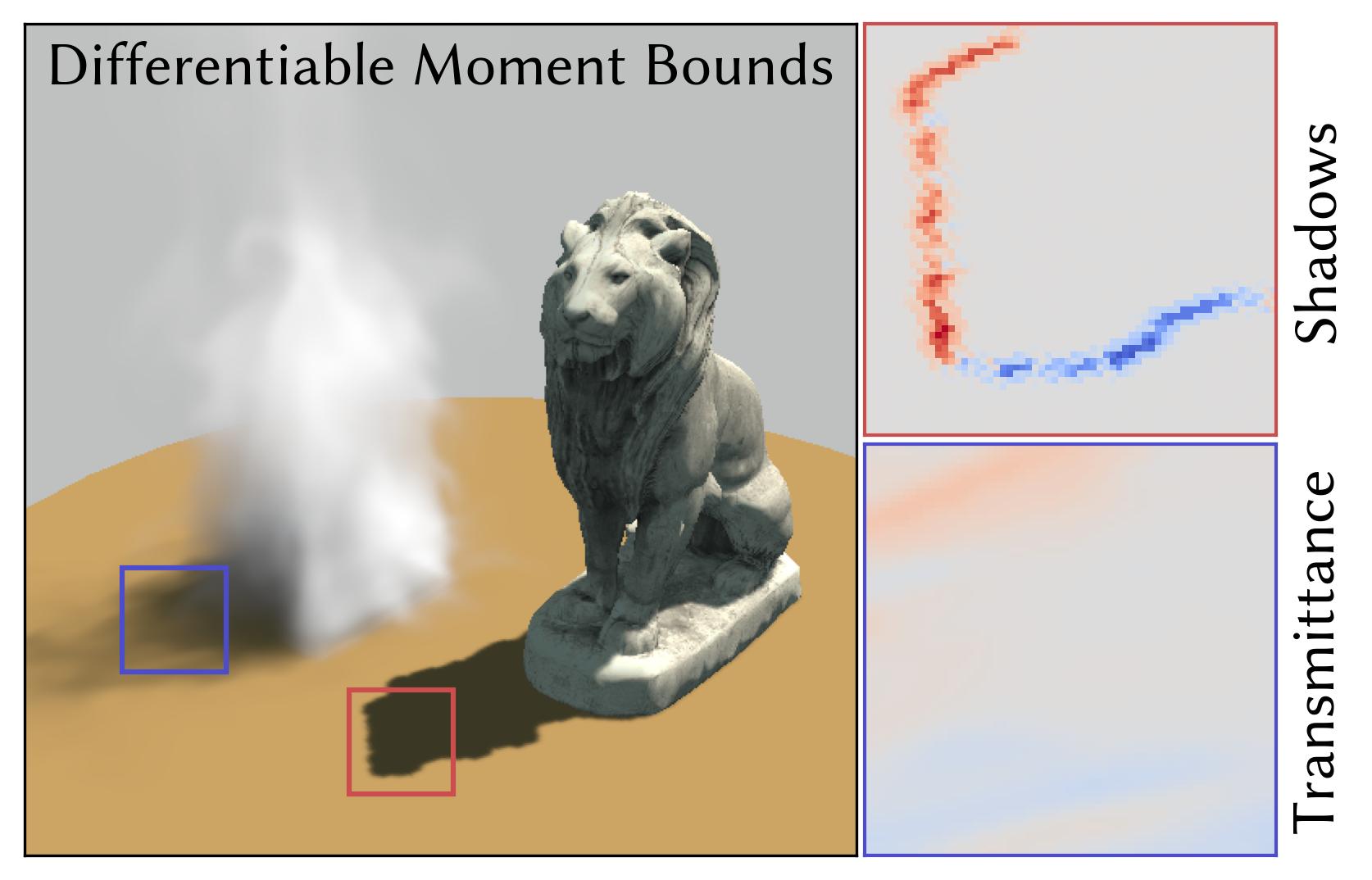

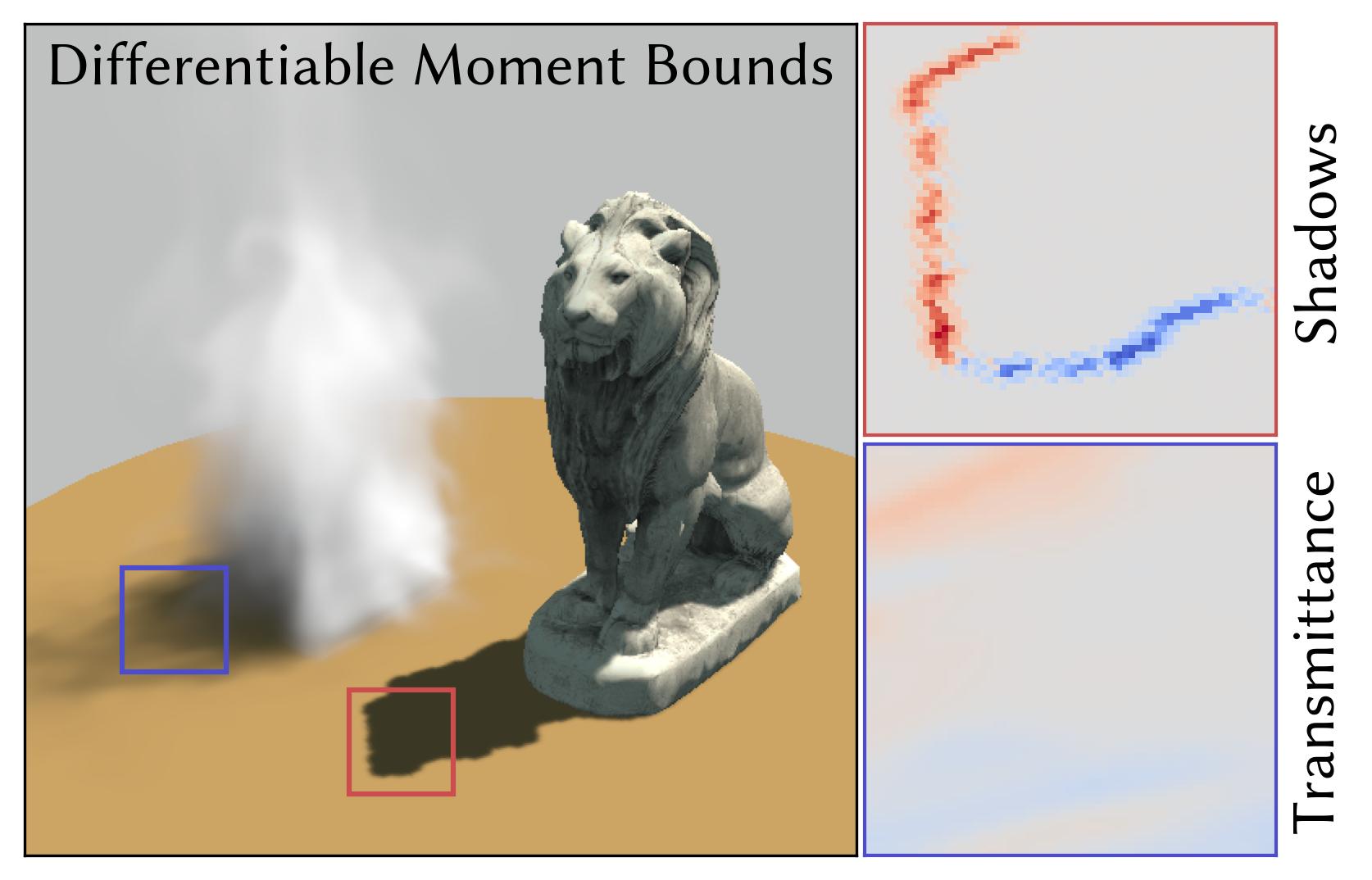

Moment Bounds are Differentiable: Efficiently Approximating Measures in Inverse Rendering (2025)

ACM Transactions on Graphics (Proc. of SIGGRAPH)

@article{worchel:2025:diffmoments,

author = {Worchel, Markus and Alexa, Marc},

title = {Moment Bounds are Differentiable: Efficiently Approximating Measures in Inverse Rendering},

year = {2025},

issue_date = {August 2025},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {44},

number = {4},

issn = {},

url = {https://doi.org/10.1145/3730899},

doi = {10.1145/3730899},

abstract = {},

journal = {ACM Trans. Graph.},

month = {aug},

articleno = {},

numpages = {},

keywords = {differentiable rendering, shadows}

}

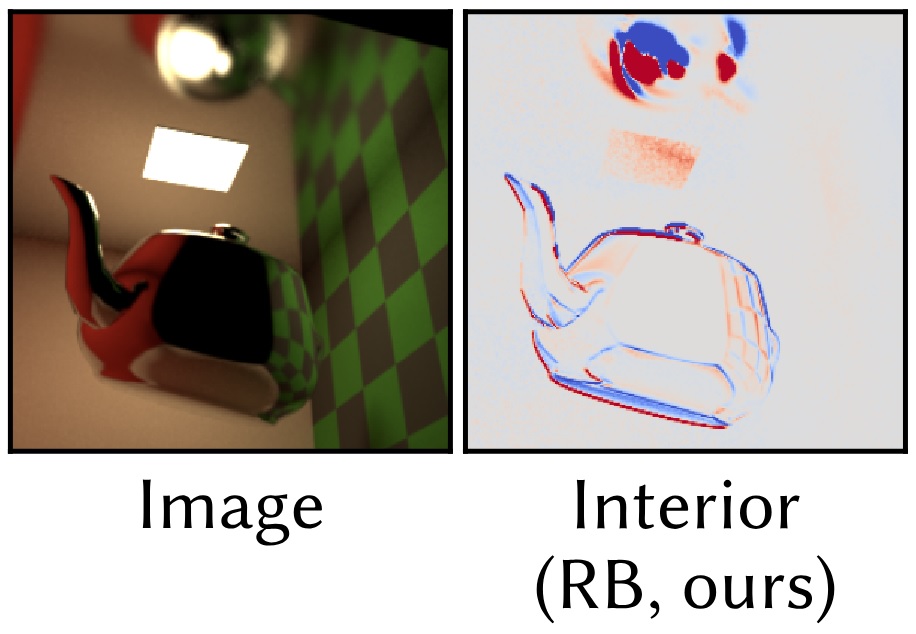

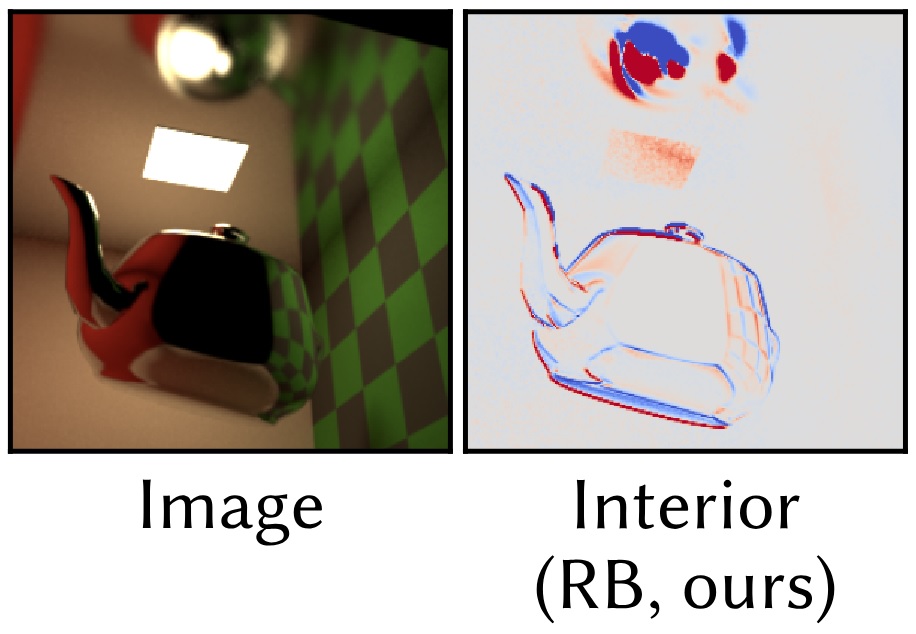

Radiative Backpropagation with Non-Static Geometry (2025)

Eurographics Symposium on Rendering (EGSR)

@inproceedings{10.2312:sr.20251198,

booktitle = {Eurographics Symposium on Rendering},

editor = {Wang, Beibei and Wilkie, Alexander},

title = {{Radiative Backpropagation with Non-Static Geometry}},

author = {Worchel, Markus and Finnendahl, Ugo and Alexa, Marc},

year = {2025},

publisher = {The Eurographics Association},

ISSN = {1727-3463},

ISBN = {978-3-03868-292-9},

DOI = {10.2312/sr.20251198}

}

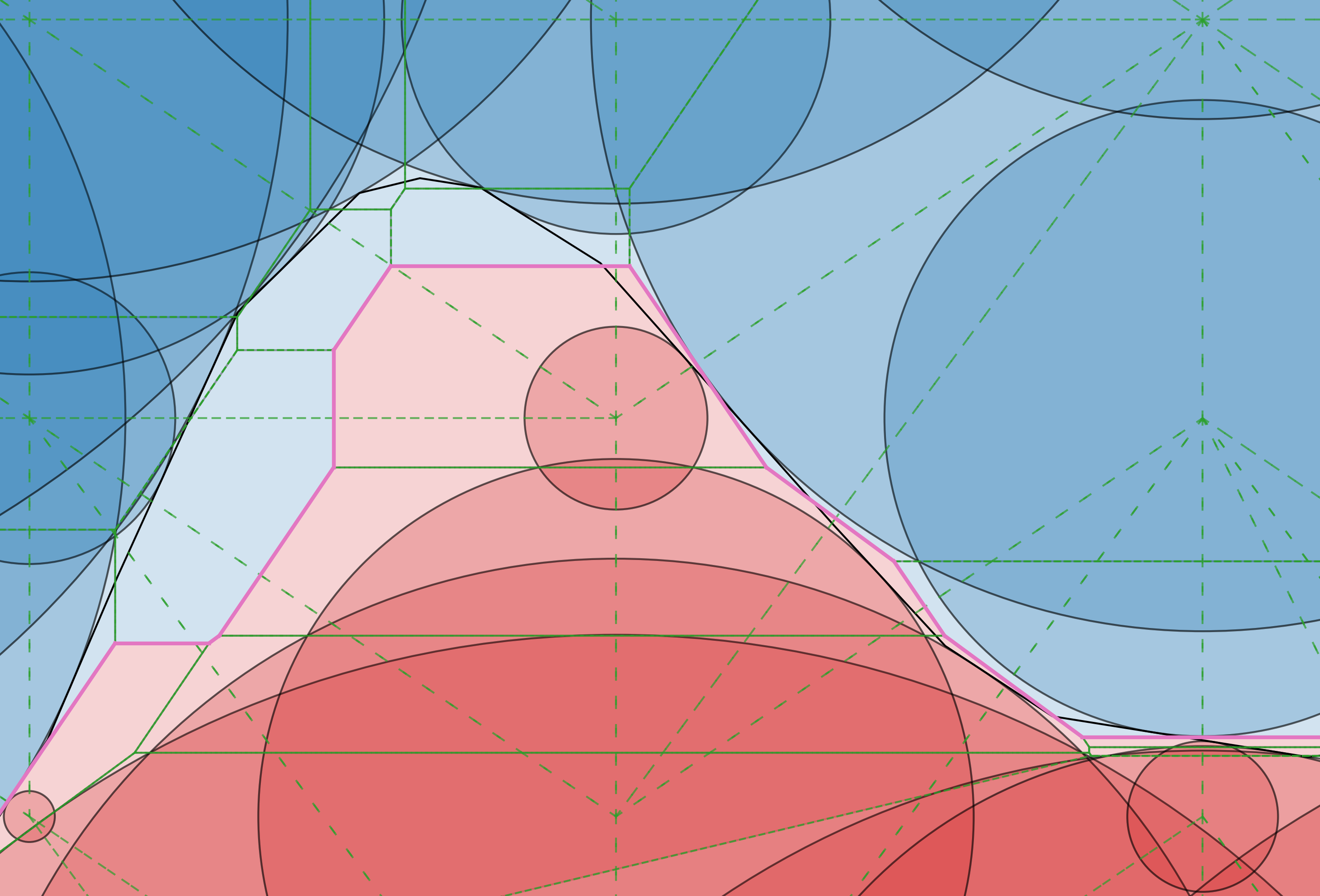

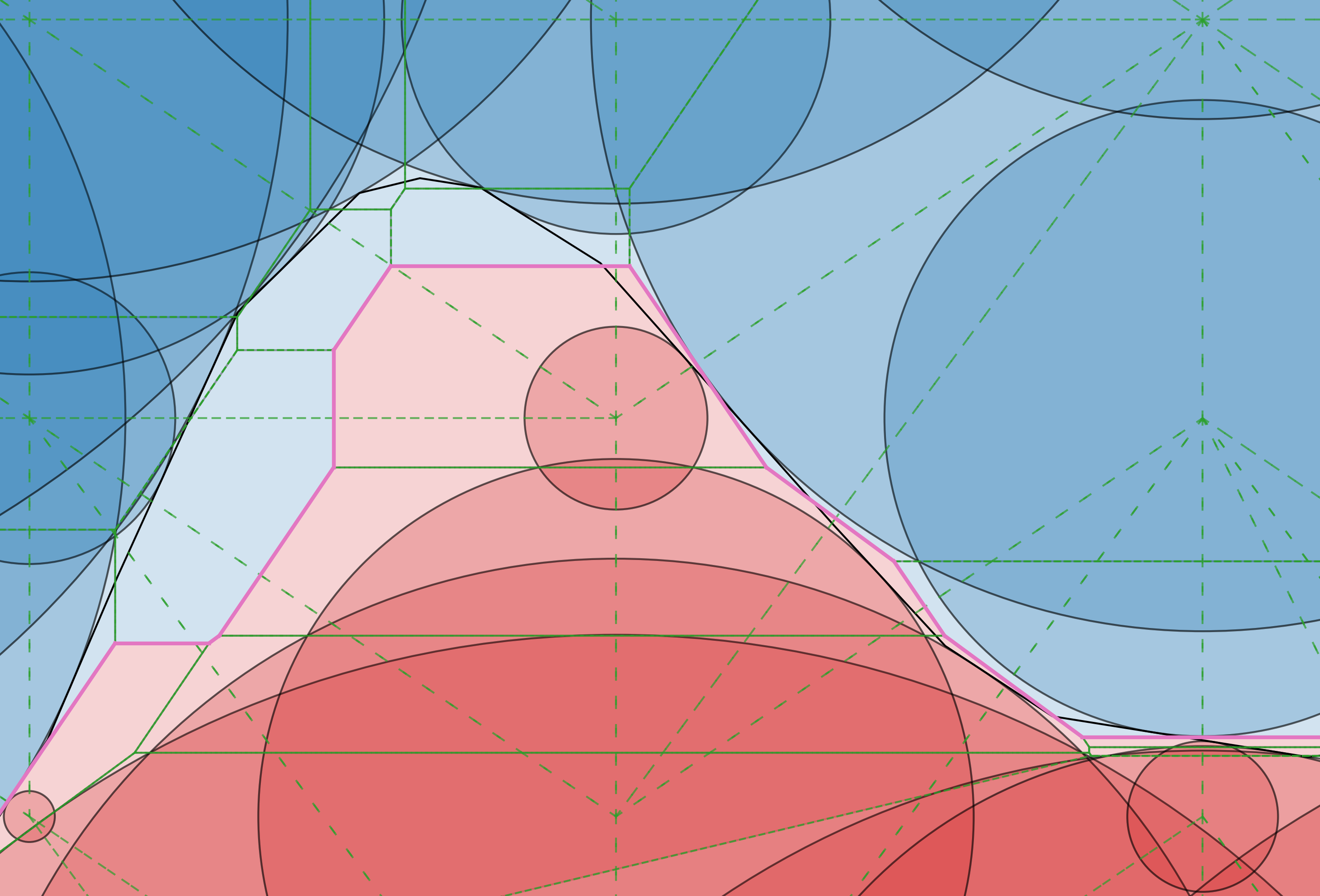

Isosurface Extraction for Signed Distance Functions using Power Diagrams (2025)

Eurographics 2025

2024

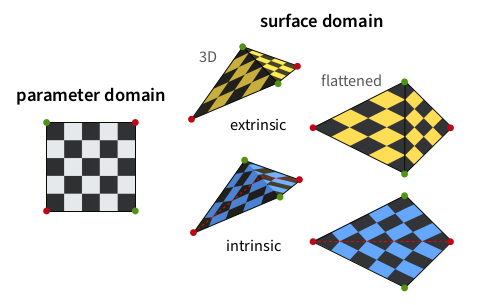

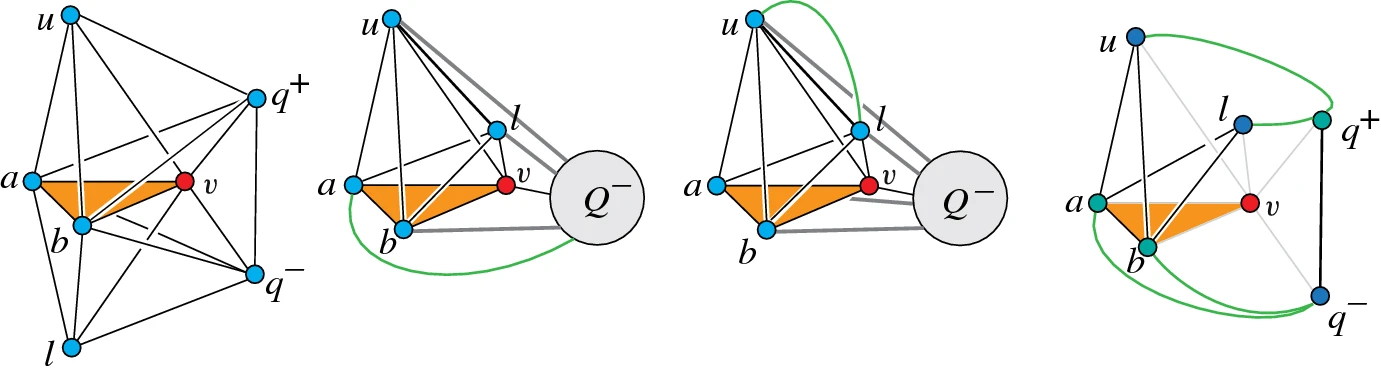

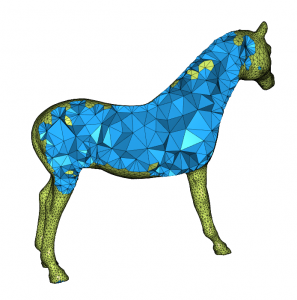

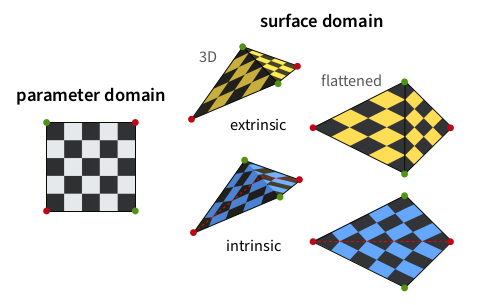

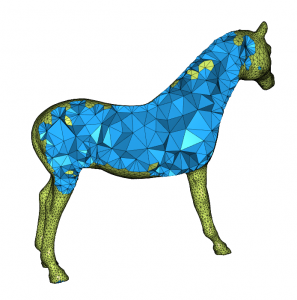

Mesh Parameterization meets Intrinsic Triangulations (2024)

Computer Graphics Forum

@article{Akalin:2024:MPIT,

author = {Akalin, Koray and Finnendahl, Ugo and Sorkine-Hornung, Olga and Alexa, Marc},

title = {Mesh Parameterization Meets Intrinsic Triangulations},

journal = {Computer Graphics Forum},

volume = {43},

number = {5},

pages = {e15134},

keywords = {CCS Concepts, • Computing methodologies → Computer graphics, Mesh models, Mesh geometry models},

doi = {https://doi.org/10.1111/cgf.15134},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.15134},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.15134},

abstract = {A parameterization of a triangle mesh is a realization in the plane so that all triangles have positive signed area. Triangle mesh parameterizations are commonly computed by minimizing a distortion energy, measuring the distortions of the triangles as they are mapped into the parameter domain. It is assumed that the triangulation is fixed and the triangles are mapped affinely. We consider a more general setup and additionally optimize among the intrinsic triangulations of the piecewise linear input geometry. This means the distortion energy is computed for the same geometry, yet the space of possible parameterizations is enlarged. For minimizing the distortion energy, we suggest alternating between varying the parameter locations of the vertices and intrinsic flipping. We show that this process improves the mapping for different distortion energies at moderate additional cost. We also find intrinsic triangulations that are better starting points for the optimization of positions, offering a compromise between the full optimization approach and exploiting the additional freedom of intrinsic triangulations.},

year = {2024}

}

Fitting Flats to Flats (2024)

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@InProceedings{Dogadov:2024:Flats,

author = {Dogadov, Gabriel and Finnendahl, Ugo and Alexa, Marc},

title = {Fitting Flats to Flats},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2024}

}

Polygon Laplacian Made Robust (2024)

- Astrid Bunge

- Dennis Bukenberger

- Sven Wagner

- Marc Alexa

- Mario Botsch

Computer Graphics Forum

@article{Bunge:2024:PLMR,

author = {Bunge, Astrid and Bukenberger, Dennis R. and Wagner, Sven D. and Alexa, Marc and Botsch, Mario},

title = {Polygon Laplacian Made Robust},

journal = {Computer Graphics Forum},

volume = {43},

number = {2},

pages = {e15025},

keywords = {Mesh geometry models, Mesh generation, Discretization},

doi = {https://doi.org/10.1111/cgf.15025},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.15025},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.15025},

abstract = {Discrete Laplacians are the basis for various tasks in geometry processing. While the most desirable properties of the discretization invariably lead to the so-called cotangent Laplacian for triangle meshes, applying the same principles to polygon Laplacians leaves degrees of freedom in their construction. From linear finite elements it is well-known how the shape of triangles affects both the error and the operator's condition. We notice that shape quality can be encapsulated as the trace of the Laplacian and suggest that trace minimization is a helpful tool to improve numerical behavior. We apply this observation to the polygon Laplacian constructed from a virtual triangulation [BHKB20] to derive optimal parameters per polygon. Moreover, we devise a smoothing approach for the vertices of a polygon mesh to minimize the trace. We analyze the properties of the optimized discrete operators and show their superiority over generic parameter selection in theory and through various experiments.},

year = {2024}

}

2023

K-Surfaces: Bézier-Splines Interpolating at Gaussian Curvature Extrema (2023)

ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)

@article{10.1145/3618383,

author = {Djuren, Tobias and Kohlbrenner, Maximilian and Alexa, Marc},

title = {K-Surfaces: B\'{e}zier-Splines Interpolating at Gaussian Curvature Extrema},

year = {2023},

issue_date = {December 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {6},

issn = {0730-0301},

url = {https://doi.org/10.1145/3618383},

doi = {10.1145/3618383},

journal = {ACM Trans. Graph.},

month = {dec},

articleno = {210},

numpages = {13},

keywords = {interactive surface modeling, b\'{e}zier patches, gaussian curvature, b\'{e}zier splines}

}

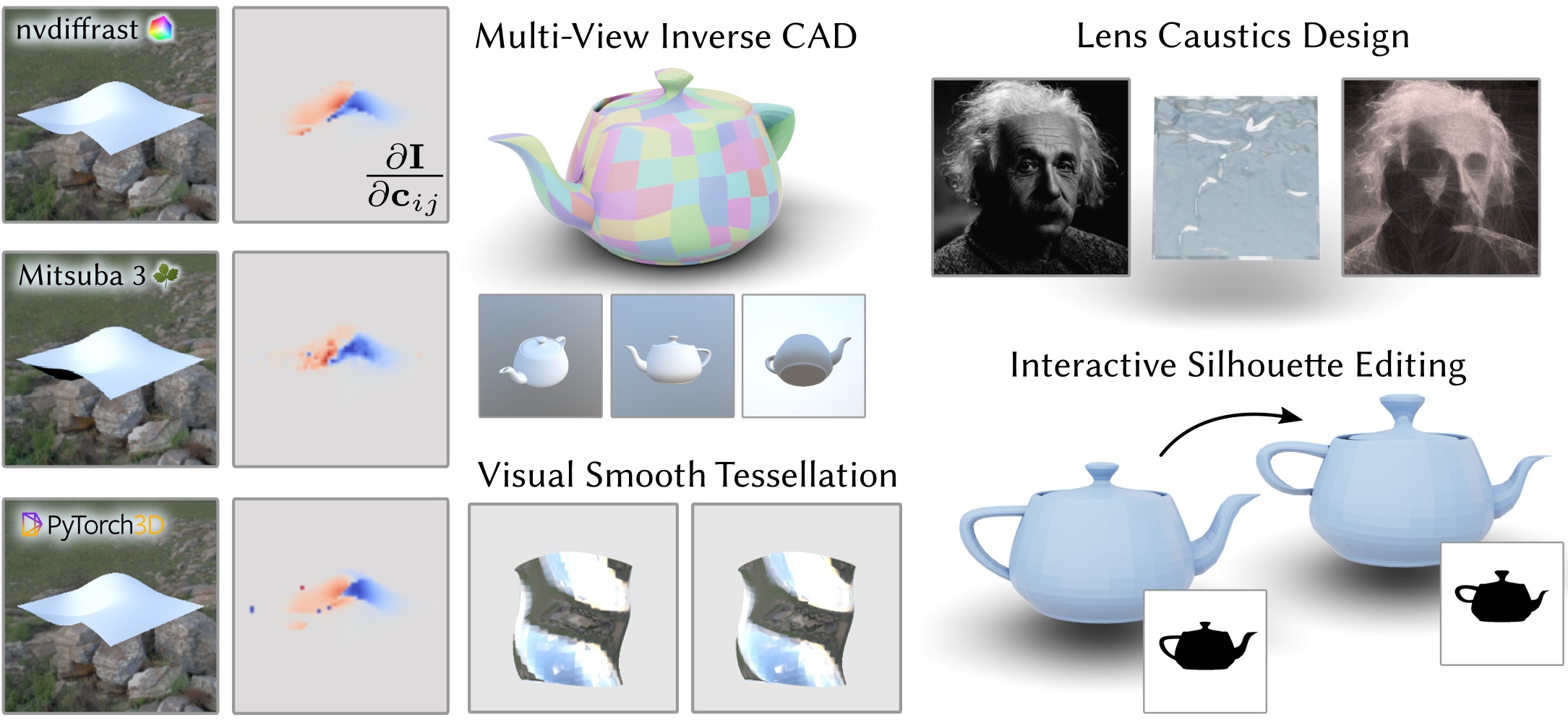

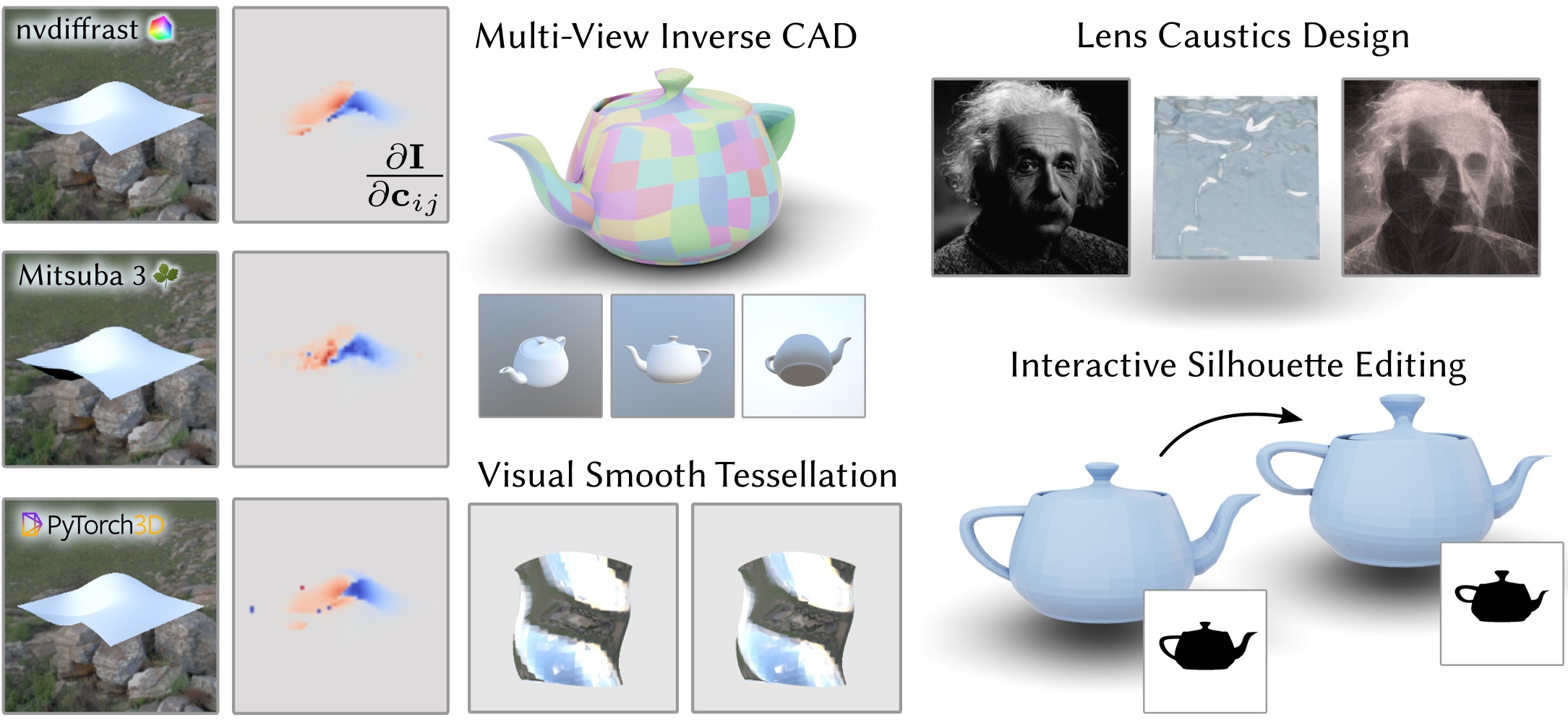

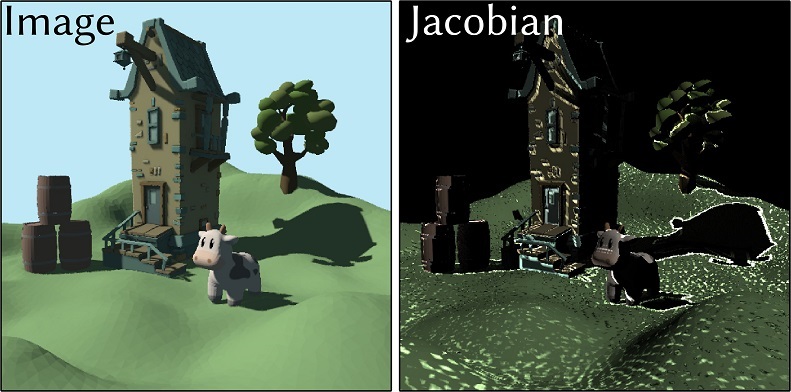

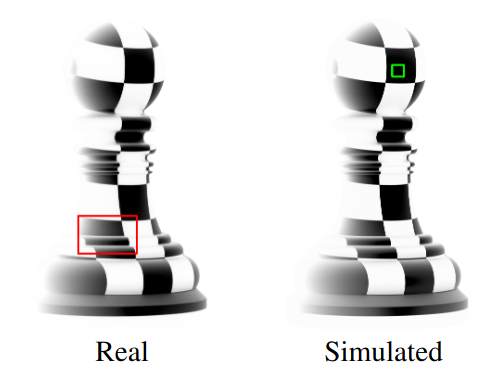

Differentiable Rendering of Parametric Geometry (2023)

ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)

@article{Worchel:2023:DRPG,

author = {Worchel, Markus and Alexa, Marc},

title = {Differentiable Rendering of Parametric Geometry},

year = {2023},

issue_date = {December 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {6},

issn = {0730-0301},

url = {https://doi.org/10.1145/3618387},

doi = {10.1145/3618387},

abstract = {We propose an efficient method for differentiable rendering of parametric surfaces and curves, which enables their use in inverse graphics problems. Our central observation is that a representative triangle mesh can be extracted from a continuous parametric object in a differentiable and efficient way. We derive differentiable meshing operators for surfaces and curves that provide varying levels of approximation granularity. With triangle mesh approximations, we can readily leverage existing machinery for differentiable mesh rendering to handle parametric geometry. Naively combining differentiable tessellation with inverse graphics settings lacks robustness and is prone to reaching undesirable local minima. To this end, we draw a connection between our setting and the optimization of triangle meshes in inverse graphics and present a set of optimization techniques, including regularizations and coarse-to-fine schemes. We show the viability and efficiency of our method in a set of image-based computer-aided design applications.},

journal = {ACM Trans. Graph.},

month = {dec},

articleno = {232},

numpages = {18},

keywords = {differentiable rendering, geometry reconstruction}

}

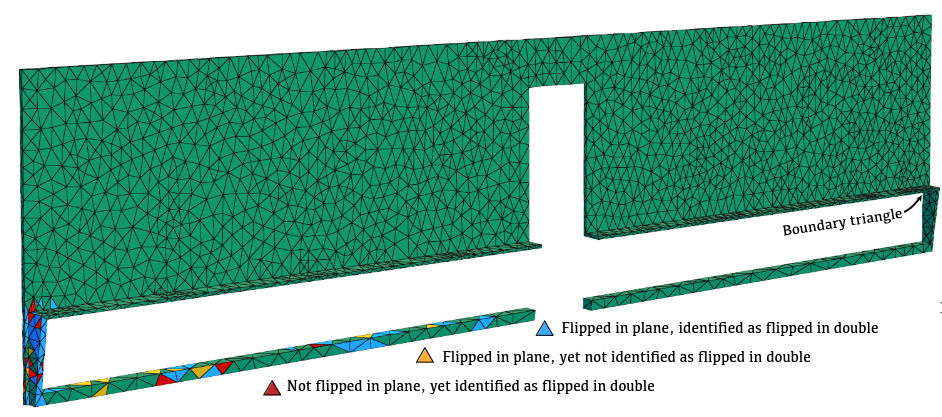

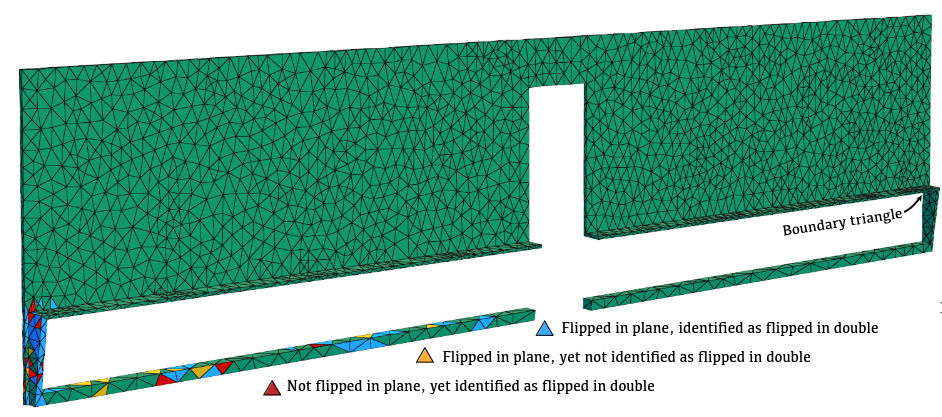

Efficient Embeddings in Exact Arithmetic (2023)

ACM Transactions on Graphics

@article{10.1145/3592445,

author = {Finnendahl, Ugo and Bogiokas, Dimitrios and Robles Cervantes, Pablo and Alexa, Marc},

title = {Efficient Embeddings in Exact Arithmetic},

year = {2023},

issue_date = {August 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {4},

issn = {0730-0301},

url = {https://doi.org/10.1145/3592445},

doi = {10.1145/3592445},

abstract = {We provide a set of tools for generating planar embeddings of triangulated topological spheres. The algorithms make use of Schnyder labelings and realizers. A new representation of the realizer based on dual trees leads to a simple linear time algorithm mapping from weights per triangle to barycentric coordinates and, more importantly, also in the reverse direction. The algorithms can be implemented so that all coefficients involved are 1 or -1. This enables integer computation, making all computations exact. Being a Schnyder realizer, mapping from positive triangle weights guarantees that the barycentric coordinates form an embedding. The reverse direction enables an algorithm for fixing flipped triangles in planar realizations, by mapping from coordinates to weights and adjusting the weights (without forcing them to be positive). In a range of experiments, we demonstrate that all algorithms are orders of magnitude faster than existing robust approaches.},

journal = {ACM Trans. Graph.},

month = {jul},

articleno = {71},

numpages = {17},

keywords = {integer coordinates, parametrization, schnyder labeling}

}

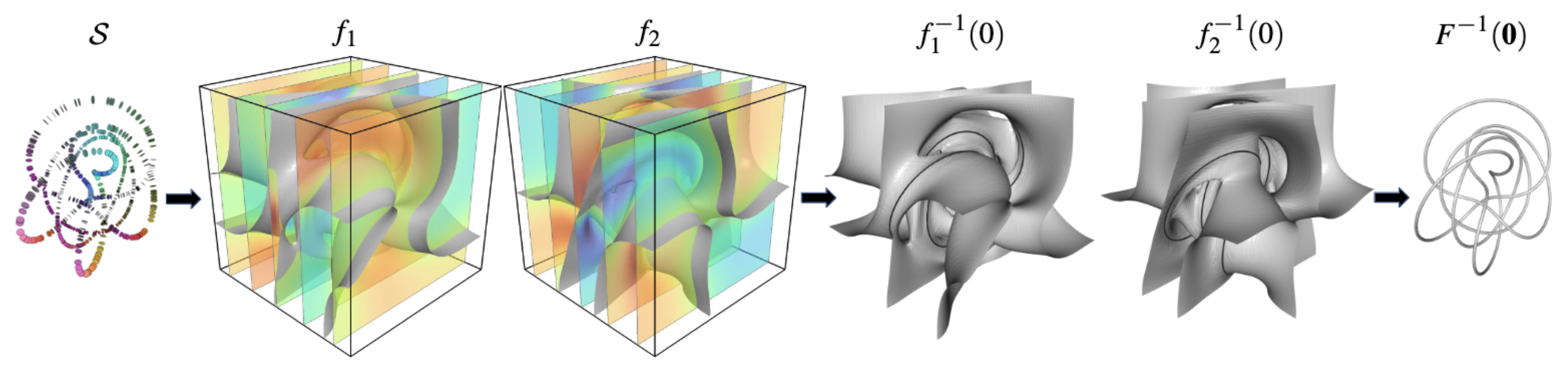

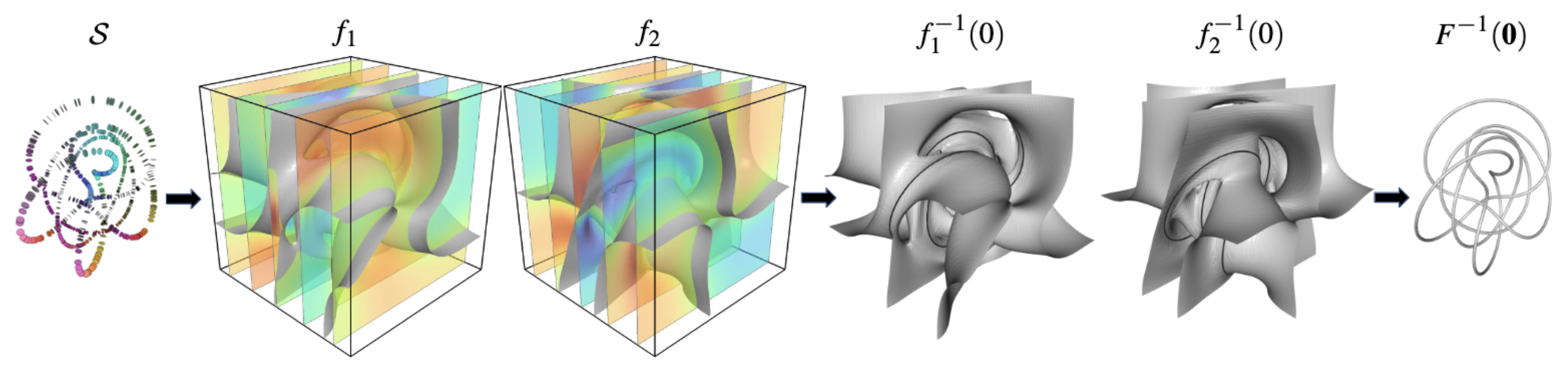

Poisson Manifold Reconstruction — Beyond Co-dimension One (2023)

Computer Graphics Forum

@article{https://doi.org/10.1111/cgf.14907,

author = {Kohlbrenner, M. and Lee, S. and Alexa, M. and Kazhdan, M.},

title = {Poisson Manifold Reconstruction — Beyond Co-dimension One},

journal = {Computer Graphics Forum},

volume = {42},

number = {5},

pages = {e14907},

keywords = {CCS Concepts, • Computing methodologies → Shape modeling, • Mathematics of computing → Nonlinear equations, Numerical analysis, curve and surface reconstruction, sub-manifold reconstruction, exterior product, polynomial optimization},

doi = {https://doi.org/10.1111/cgf.14907},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.14907},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.14907},

abstract = {Screened Poisson Surface Reconstruction creates 2D surfaces from sets of oriented points in 3D (and can be extended to co-dimension one surfaces in arbitrary dimensions). In this work we generalize the technique to manifolds of co-dimension larger than one. The reconstruction problem consists of finding a vector-valued function whose zero set approximates the input points. We argue that the right extension of screened Poisson Surface Reconstruction is based on exterior products: the orientation of the point samples is encoded as the exterior product of the local normal frame. The goal is to find a set of scalar functions such that the exterior product of their gradients matches the exterior products prescribed by the input points. We show that this setup reduces to the standard formulation for co-dimension 1, and leads to more challenging multi-quadratic optimization problems in higher co-dimension. We explicitly treat the case of co-dimension 2, i.e., curves in 3D and 2D surfaces in 4D. We show that the resulting bi-quadratic problem can be relaxed to a set of quadratic problems in two variables and that the solution can be made effective and efficient by leveraging a hierarchical approach.},

year = {2023}

}

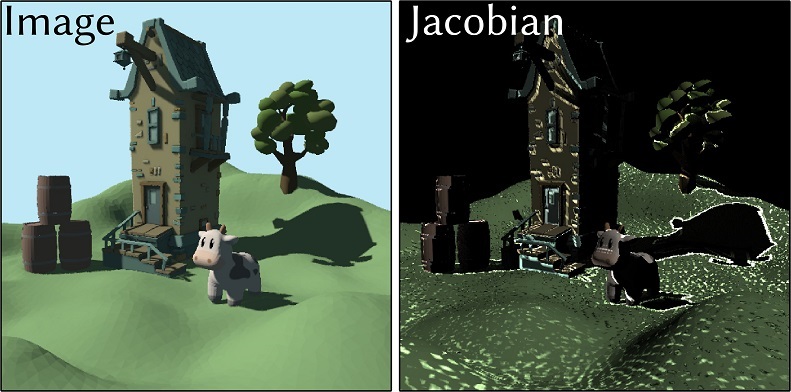

Differentiable Shadow Mapping for Efficient Inverse Graphics (2023)

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@inproceedings{worchel:2023:diff_shadow,

title = {Differentiable Shadow Mapping for Efficient Inverse Graphics},

author = {Markus Worchel and Marc Alexa},

url = {https://openaccess.thecvf.com/content/CVPR2023/html/Worchel_Differentiable_Shadow_Mapping_for_Efficient_Inverse_Graphics_CVPR_2023_paper.html, CVF Open Access Version

https://mworchel.github.io/differentiable-shadow-mapping/, Project Page

https://github.com/mworchel/differentiable-shadow-mapping, Code},

year = {2023},

date = {2023-06-01},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {142-153},

abstract = {We show how shadows can be efficiently generated in differentiable rendering of triangle meshes. Our central observation is that pre-filtered shadow mapping, a technique for approximating shadows based on rendering from the perspective of a light, can be combined with existing differentiable rasterizers to yield differentiable visibility information. We demonstrate at several inverse graphics problems that differentiable shadow maps are orders of magnitude faster than differentiable light transport simulation with similar accuracy -- while differentiable rasterization without shadows often fails to converge. },

keywords = {computer graphics, differentiable rendering, machine learning, neural rendering},

pubstate = {published},

tppubtype = {inproceedings}

}

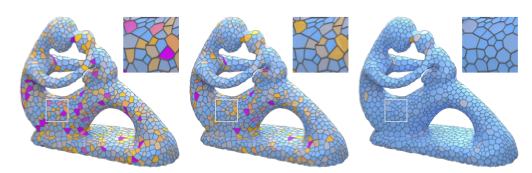

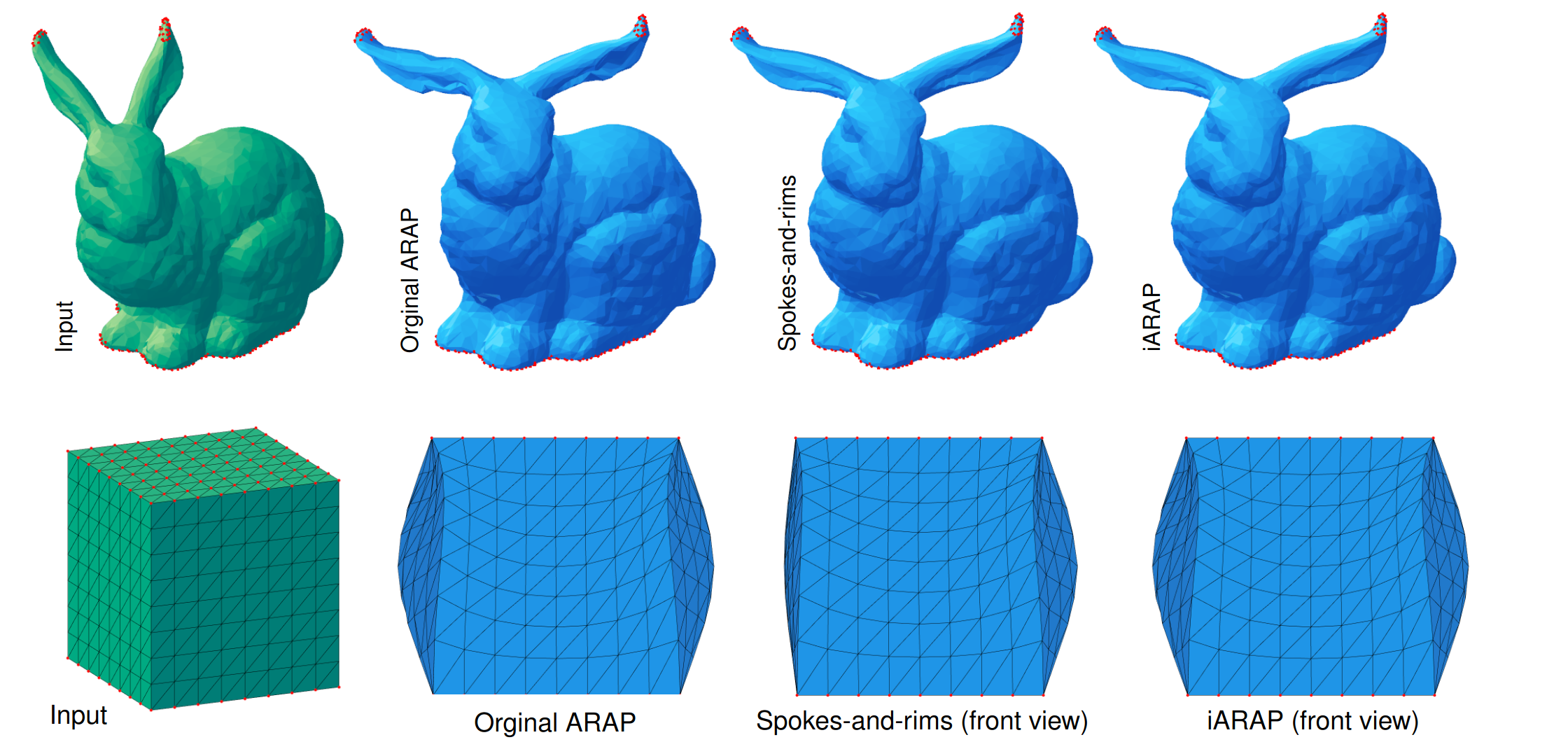

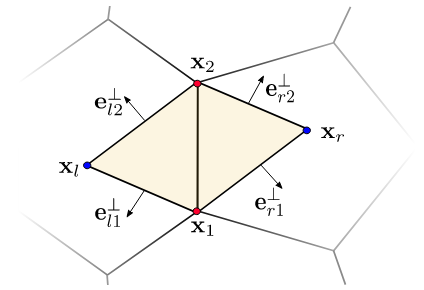

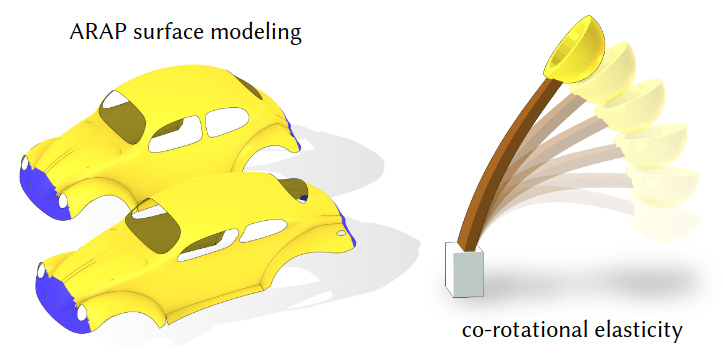

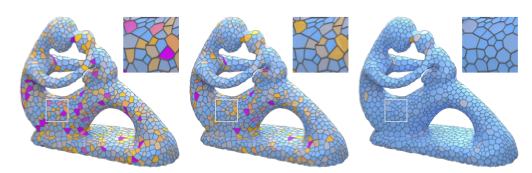

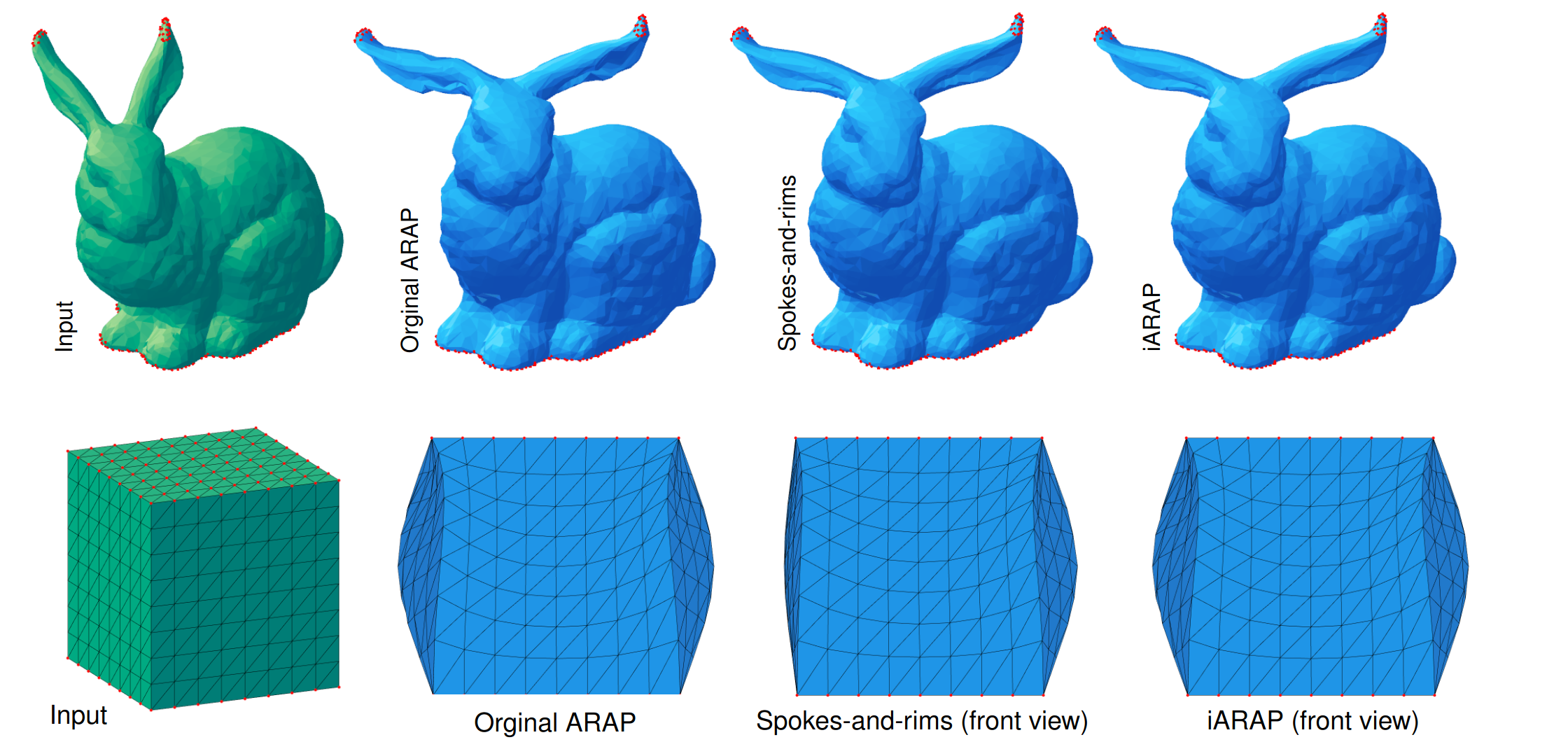

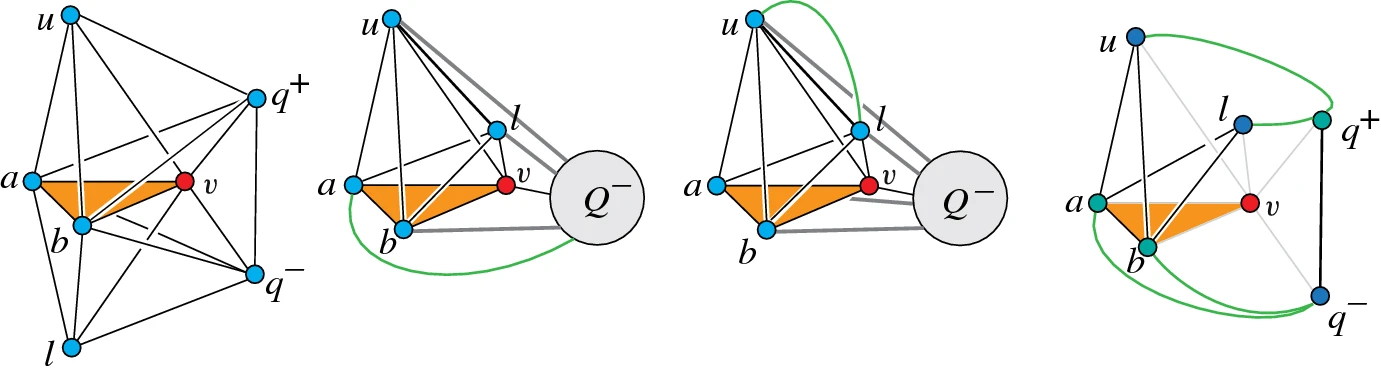

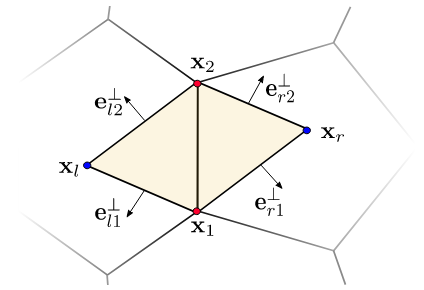

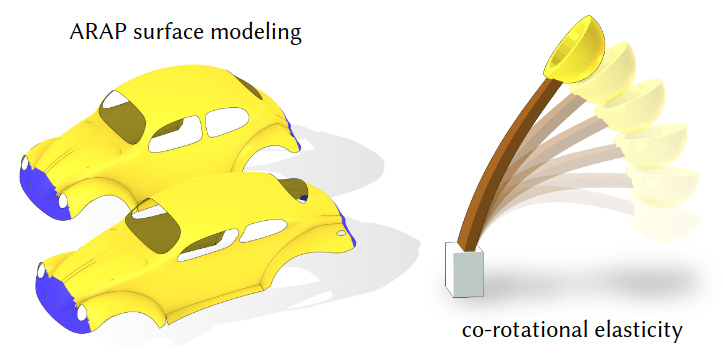

ARAP Revisited: Discretizing the Elastic Energy using Intrinsic Voronoi Cells (2023)

Computer Graphics Forum

@article{Finnendahl:2023:AR,

author = {Finnendahl, Ugo and Schwartz, Matthias and Alexa, Marc},

title = {ARAP Revisited: Discretizing the Elastic Energy using Intrinsic Voronoi Cells},

journal = {Computer Graphics Forum},

year={2023},

volume = {43},

number = {5},

pages = {e15134},

keywords = {modelling, deformations, polygonal modelling},

doi = {https://doi.org/10.1111/cgf.14790},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.14790},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.14790},

abstract = {As-rigid-as-possible (ARAP) surface modelling is widely used for interactive deformation of triangle meshes. We show that ARAP can be interpreted as minimizing a discretization of an elastic energy based on non-conforming elements defined over dual orthogonal cells of the mesh. Using the intrinsic Voronoi cells rather than an orthogonal dual of the extrinsic mesh guarantees that the energy is non-negative over each cell. We represent the intrinsic Delaunay edges extrinsically as polylines over the mesh, encoded in barycentric coordinates relative to the mesh vertices. This modification of the original ARAP energy, which we term iARAP, remedies problems stemming from non-Delaunay edges in the original approach. Unlike the spokes-and-rims version of the ARAP approach it is less susceptible to the triangulation of the surface. We provide examples of deformations generated with iARAP and contrast them with other versions of ARAP. We also discuss the properties of the Laplace-Beltrami operator implicitly introduced with the new discretization.}

}

Tutte embeddings of tetrahedral meshes (2023)

Discrete & Computational Geometry

@article{Alexa:2024:TE,

abstract = {Tutte's embedding theorem states that every 3-connected graph without a $K_5$- or $K_{3,3}$-minor (i.e., a planar graph) is embedded in the plane if the outer face is in convex position and the interior vertices are convex combinations of their neighbors. We show that this result extends to simply connected tetrahedral meshes in a natural way: for the tetrahedral mesh to be embedded if the outer polyhedron is in convex position and the interior vertices are convex combination of their neighbors it is sufficient (but not necessary) that the graph of the tetrahedral mesh contains no $K_6$ and no $K_{3,3,1}$, and all triangles incident on three boundary vertices are boundary triangles.},

author = {Alexa, Marc},

date = {2023/03/17},

doi = {10.1007/s00454-023-00494-0},

isbn = {1432-0444},

journal = {Discrete \& Computational Geometry},

title = {Tutte Embeddings of Tetrahedral Meshes},

url = {https://doi.org/10.1007/s00454-023-00494-0},

year = {2023}

}

2022

α-Functions: Piecewise-linear Approximation from Noisy and Hermite Data (2022)

ACM SIGGRAPH 2022 Conference Proceedings

@inproceedings{alexa:2022:alpha_functions,

title = {$\alpha$-Functions: Piecewise-linear Approximation from Noisy and Hermite Data},

author = {Marc Alexa},

url = {https://dl.acm.org/doi/abs/10.1145/3528233.3530743},

doi = {10.1145/3528233.3530743},

year = {2022},

date = {2022-07-27},

booktitle = {ACM SIGGRAPH 2022 Conference Proceedings},

pages = {1-9},

abstract = {We introduce α-functions, providing piecewise linear approximation to given data as the difference of two convex functions. The parameter α controls the shape of a paraboloid that is probing the data and may be used to filter out noise in the data. The use of convex functions enables tools for efficient approximation to the data, adding robustness to outliers, and dealing with gradient information. It also allows using the approach in higher dimension. We show that α-functions can be efficiently computed and demonstrate their versatility at the example of surface reconstruction from noisy surface samples.},

keywords = {computer graphics, geometry processing},

pubstate = {published},

tppubtype = {inproceedings}

}

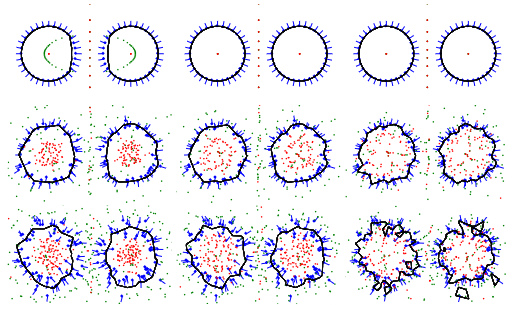

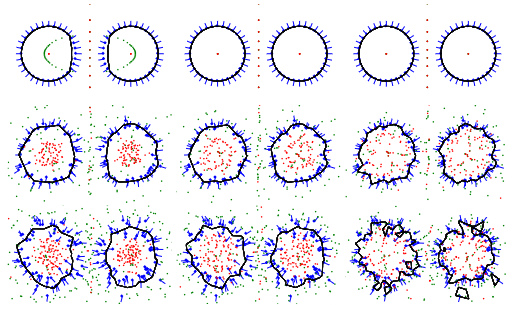

Super-Fibonacci Spirals: Fast, Low-Discrepancy Sampling of SO(3) (2022)

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@inproceedings{Alexa:2022:SFS,

title = {Super-Fibonacci Spirals: Fast, Low-Discrepancy Sampling of SO(3)},

author = {Marc Alexa},

url = {https://openaccess.thecvf.com/content/CVPR2022/html/Alexa_Super-Fibonacci_Spirals_Fast_Low-Discrepancy_Sampling_of_SO3_CVPR_2022_paper.html, CVF Open Access Version

https://marcalexa.github.io/superfibonacci/, Project on Github},

year = {2022},

date = {2022-06-01},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {8291-8300},

abstract = {Super-Fibonacci spirals are an extension of Fibonacci spirals, enabling fast generation of an arbitrary but fixed number of 3D orientations. The algorithm is simple and fast. A comprehensive evaluation comparing to other methods shows that the generated sets of orientations have low discrepancy, minimal spurious components in the power spectrum, and almost identical Voronoi volumes. This makes them useful for a variety of applications in vision, robotics, machine learning, and in particular Monte Carlo sampling.},

keywords = {scientific computing},

pubstate = {published},

tppubtype = {inproceedings}

}

Multi-View Mesh Reconstruction With Neural Deferred Shading (2022)

- Markus Worchel

- Rodrigo Diaz

- Weiwen Hu

- Oliver Schreer

- Ingo Feldmann

- Peter Eisert

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

@inproceedings{Worchel:2022:NDS,

title = {Multi-View Mesh Reconstruction With Neural Deferred Shading},

author = {Markus Worchel and Rodrigo Diaz and Weiwen Hu and Oliver Schreer and Ingo Feldmann and Peter Eisert},

url = {https://openaccess.thecvf.com/content/CVPR2022/html/Worchel_Multi-View_Mesh_Reconstruction_With_Neural_Deferred_Shading_CVPR_2022_paper.html, CVF Open Access Version

https://fraunhoferhhi.github.io/neural-deferred-shading/, Project Page

https://github.com/fraunhoferhhi/neural-deferred-shading, Code},

year = {2022},

date = {2022-06-01},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {6187-6197},

abstract = {We propose an analysis-by-synthesis method for fast multi-view 3D reconstruction of opaque objects with arbitrary materials and illumination. State-of-the-art methods use both neural surface representations and neural rendering. While flexible, neural surface representations are a significant bottleneck in optimization runtime. Instead, we represent surfaces as triangle meshes and build a differentiable rendering pipeline around triangle rasterization and neural shading. The renderer is used in a gradient descent optimization where both a triangle mesh and a neural shader are jointly optimized to reproduce the multi-view images. We evaluate our method on a public 3D reconstruction dataset and show that it can match the reconstruction accuracy of traditional baselines and neural approaches while surpassing them in optimization runtime. Additionally, we investigate the shader and find that it learns an interpretable representation of appearance, enabling applications such as 3D material editing.},

keywords = {3D reconstruction, computer graphics, computer vision, differentiable rendering, machine learning, neural rendering},

pubstate = {published},

tppubtype = {inproceedings}

}

2021

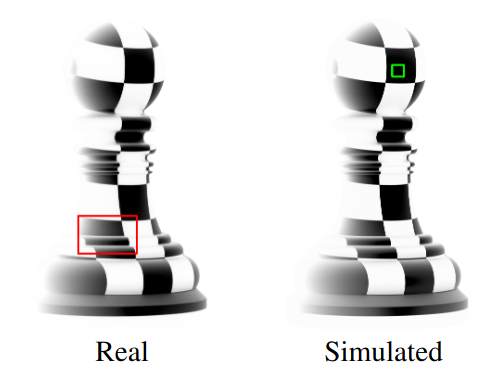

Hardware Design and Accurate Simulation of Structured-Light Scanning for Benchmarking of 3D Reconstruction Algorithms (2021)

Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021)

@incollection{Koch:2021:HDA,

title = {Hardware Design and Accurate Simulation of Structured-Light Scanning for Benchmarking of 3D Reconstruction Algorithms},

author = {Sebastian Koch and Yurii Piadyk and Markus Worchel and Marc Alexa and Claudio Silva and Denis Zorin and Daniele Panozzo},

url = {https://geometryprocessing.github.io/scanner-sim, Project Page},

year = {2021},

date = {2021-10-10},

booktitle = {Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021)},

issuetitle = {Datasets and Benchmarks Track},

abstract = {Images of a real scene taken with a camera commonly differ from synthetic images of a virtual replica of the same scene, despite advances in light transport simulation and calibration. By explicitly co-developing the Structured-Light Scanning (SLS) hardware and rendering pipeline we are able to achieve negligible per-pixel difference between the real image and the synthesized image on geometrically complex calibration objects with known material properties. This approach provides an ideal test-bed for developing and evaluating data-driven algorithms in the area of 3D reconstruction, as the synthetic data is indistinguishable from real data and can be generated at large scale by simulation. We propose three benchmark challenges using a combination of acquired and synthetic data generated with our system: (1) a denoising benchmark tailored to structured-light scanning, (2) a shape completion benchmark to fill in missing data, and (3) a benchmark for surface reconstruction from dense point clouds. Besides, we provide a large collection of high-resolution scans that allow to use our system and benchmarks without reproduction of the hardware setup on our website},

howpublished = {https://openreview.net/forum?id=bNL5VlTfe3p},

keywords = {computer graphics},

pubstate = {published},

tppubtype = {incollection}

}

The Diamond Laplace for Polygonal and Polyhedral Meshes (2021)

Computer Graphics Forum

@article{Bunge:DL:2021,

title = {The Diamond Laplace for Polygonal and Polyhedral Meshes},

author = {Astrid Bunge and Mario Botsch and Marc Alexa},

url = {https://www.youtube.com/watch?v=i7mYiJSG2ss, Talk (YouTube)},

doi = {10.1111/cgf.14369},

year = {2021},

date = {2021-08-23},

journal = {Computer Graphics Forum},

volume = {40},

number = {5},

pages = {217-230},

abstract = {We introduce a construction for discrete gradient operators that can be directly applied to arbitrary polygonal surface as well as polyhedral volume meshes. The main idea is to associate the gradient of functions defined at vertices of the mesh with diamonds: the region spanned by a dual edge together with its corresponding primal element — an edge for surface meshes and a face for volumetric meshes. We call the operator resulting from taking the divergence of the gradient Diamond Laplacian. Additional vertices used for the construction are represented as affine combinations of the original vertices, so that the Laplacian operator maps from values at vertices to values at vertices, as is common in geometry processing applications. The construction is local, exactly the same for all types of meshes, and results in a symmetric negative definite operator with linear precision. We show that the accuracy of the Diamond Laplacian is similar or better compared to other discretizations. The greater versatility and generally good behavior come at the expense of an increase in the number of non-zero coefficients that depends on the degree of the mesh elements.},

keywords = {computer graphics, geometry processing},

pubstate = {published},

tppubtype = {article}

}

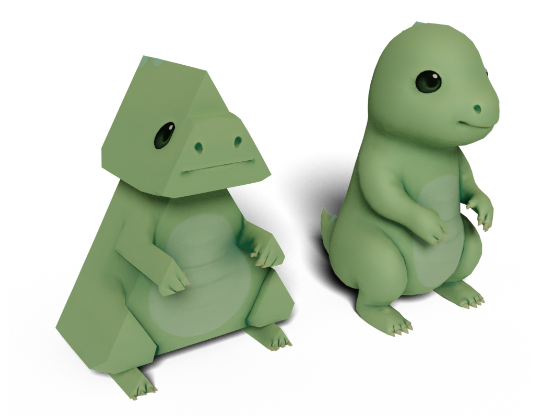

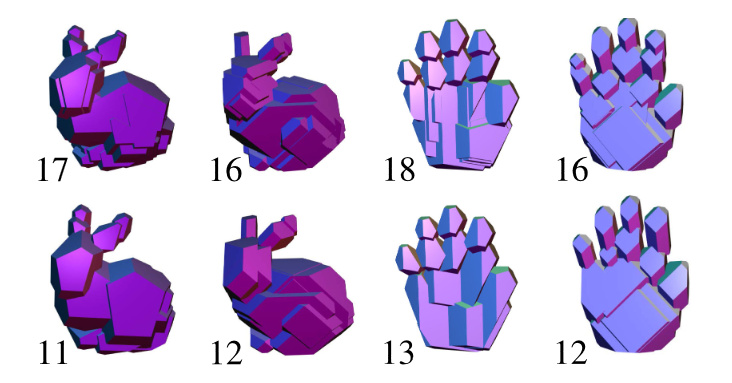

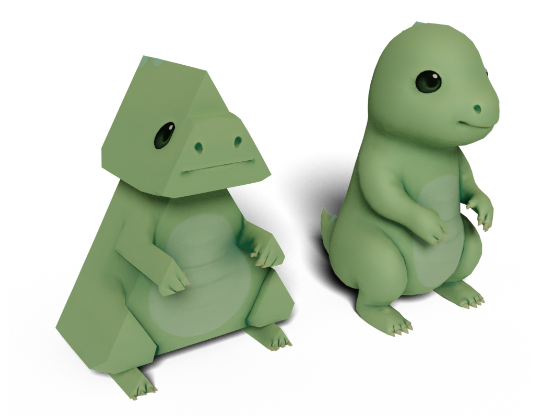

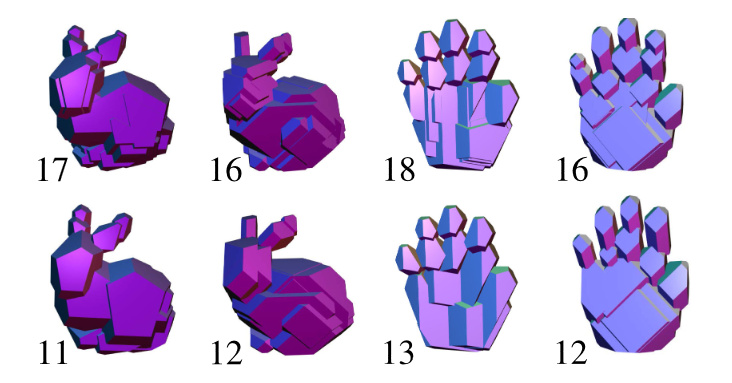

Gauss Stylization: Interactive Artistic Mesh Modeling based on Preferred Surface Normals (2021)

Computer Graphics Forum

@article{Kohlbrenner2021,

title = {Gauss Stylization: Interactive Artistic Mesh Modeling based on Preferred Surface Normals},

author = {Max Kohlbrenner and Ugo Finnendahl and Tobias Djuren and Marc Alexa},

url = {https://cybertron.cg.tu-berlin.de/projects/gaussStylization/},

doi = {https://doi.org/10.1111/cgf.14355},

year = {2021},

date = {2021-08-23},

journal = {Computer Graphics Forum},

volume = {40},

number = {5},

pages = {33-43},

abstract = {Extending the ARAP energy with a term that depends on the face normal, energy minimization becomes an effective stylization tool for shapes represented as meshes. Our approach generalizes the possibilities of Cubic Stylization: the set of preferred normals can be chosen arbitrarily from the Gauss sphere, including semi-discrete sets to model preference for cylinder- or cone-like shapes. The optimization is designed to retain, similar to ARAP, the constant linear system in the global optimization. This leads to convergence behavior that enables interactive control over the parameters of the optimization. We provide various examples demonstrating the simplicity and versatility of the approach.},

keywords = {computer graphics, geometric stylization, geometry processing, non-photorealistic rendering, shape modeling},

pubstate = {published},

tppubtype = {article}

}

Fast Updates for Least-Squares Rotational Alignment (2021)

Computer Graphics Forum

@article{Zhang:FRA:2021,

title = {Fast Updates for Least-Squares Rotational Alignment},

author = {Jiayi Eris Zhang and Alec Jacobson and Marc Alexa},

url = {https://www.dgp.toronto.edu/projects/fast-rotation-fitting/, Project Page},

doi = {10.1111/cgf.142611},

year = {2021},

date = {2021-06-04},

journal = {Computer Graphics Forum},

volume = {40},

number = {2},

pages = {12-22},

abstract = {Across computer graphics, vision, robotics and simulation, many applications rely on determining the 3D rotation that aligns two objects or sets of points. The standard solution is to use singular value decomposition (SVD), where the optimal rotation is recovered as the product of the singular vectors. Faster computation of only the rotation is possible using suitable parameterizations of the rotations and iterative optimization. We propose such a method based on the Cayley transformations. The resulting optimization problem allows better local quadratic approximation compared to the Taylor approximation of the exponential map. This results in both faster convergence as well as more stable approximation compared to other iterative approaches. It also maps well to AVX vectorization. We compare our implementation with a wide range of alternatives on real and synthetic data. The results demonstrate up to two orders of magnitude of speedup compared to a straightforward SVD implementation and a 1.5-6 times speedup over popular optimized code.},

keywords = {computer graphics, geometric mechanics},

pubstate = {published},

tppubtype = {article}

}

PolyCover: Shape Approximating With Discrete Surface Orientation (2021)

IEEE Computer Graphics and Applications

@article{Alexa:PC:2021,

title = {PolyCover: Shape Approximating With Discrete Surface Orientation},

author = {Marc Alexa},

doi = {10.1109/MCG.2021.3060946},

issn = {0272-1716},

year = {2021},

date = {2021-02-22},

journal = {IEEE Computer Graphics and Applications},

volume = {41},

number = {3},

pages = {85-95},

abstract = {We consider the problem of approximating given shapes so that the surface normals are restricted to a prescribed discrete set. Such shape approximations are commonly required in the context of manufacturing shapes. We provide an algorithm that first computes maximal interior polytopes and, then, selects a subset of offsets from the interior polytopes that cover the shape. This provides prescribed Hausdorff error approximations that use only a small number of primitives.},

keywords = {computer graphics, geometry processing, shape approximation},

pubstate = {published},

tppubtype = {article}

}

2020

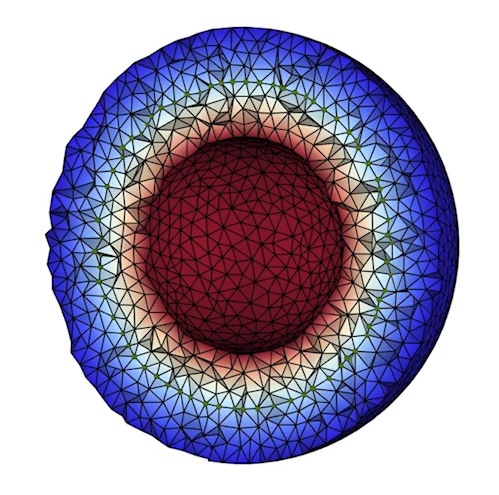

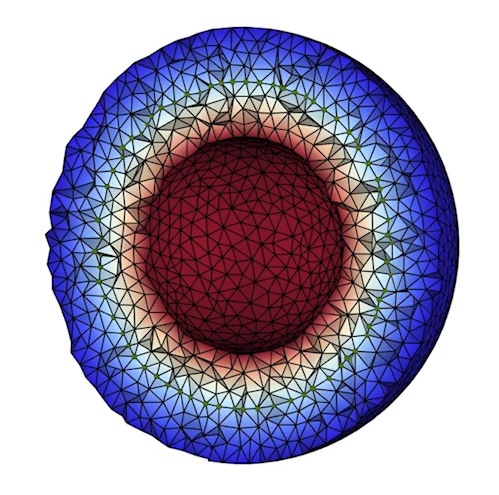

Conforming Weighted Delaunay Triangulation (2020)

ACM Transactions on Graphics

@article{Alexa:CWD:2020,

title = {Conforming Weighted Delaunay Triangulation},

author = {Marc Alexa},

url = {https://www.cg.tu-berlin.de/research/projects/cwdt/, Project page

https://dl.acm.org/doi/10.1145/3414685.3417776?cid=81100235480, ACM-authorized paper},

doi = {10.1145/3414685.3417776},

year = {2020},

date = {2020-12-06},

journal = {ACM Transactions on Graphics},

volume = {39},

number = {6},

pages = {248},

abstract = {Given a set of points together with a set of simplices we show how to compute weights associated with the points such that the weighted Delaunay triangulation of the point set contains the simplices, if possible. For a given triangulated surface, this process provides a tetrahedral mesh conforming to the triangulation, i.e. solves the problem of meshing the triangulated surface without inserting additional vertices. The restriction to weighted Delaunay triangulations ensures that the orthogonal dual mesh is embedded, facilitating common geometry processing tasks.

We show that the existence of a single simplex in a weighted Delaunay triangulation for given vertices amounts to a set of linear inequalities, one for each vertex. This means that the number of inequalities for a given triangle mesh is quadratic in the number of mesh elements, making the naive approach impractical. We devise an algorithm that incrementally selects a small subset of inequalities, repeatedly updating the weights, until the weighted Delaunay triangulation contains all constrained simplices or the problem becomes infeasible. Applying this algorithm to a range of triangle meshes commonly used in graphics demonstrates that many of them admit a conforming weighted Delaunay triangulation, in contrast to conforming or constrained Delaunay that require additional vertices to split the input primitives.},

keywords = {computer graphics, geometry processing, laplacian},

pubstate = {published},

tppubtype = {article}

}

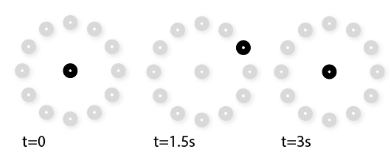

Computational discrimination between natural images based on gaze during mental imagery (2020)

Scientific Reports

@article{Wang:2020:CD,

title = {Computational discrimination between natural images based on gaze during mental imagery},

author = {Xi Wang and Andreas Ley and Sebastian Koch and James Hays and Kenneth Holmqvist and Marc Alexa},

url = {https://rdcu.be/b6tel, Article

http://cybertron.cg.tu-berlin.de/xiwang/mental_imagery/retrieval.html, Related Project},

doi = {10.1038/s41598-020-69807-0},

issn = {2045-2322},

year = {2020},

date = {2020-08-03},

journal = {Scientific Reports},

volume = {10},

pages = {13035},

abstract = {When retrieving an image from memory, humans usually move their eyes spontaneously as if the image were in front of them. Such eye movements correlate strongly with the spatial layout of the recalled image content and function as memory cues facilitating the retrieval procedure. However, how close the correlation is between imagery eye movements and the eye movements while looking at the original image is unclear so far. In this work we first quantify the similarity of eye movements between recalling an image and encoding the same image, followed by the investigation on whether comparing such pairs of eye movements can be used for computational image retrieval. Our results show that computational image retrieval based on eye movements during spontaneous imagery is feasible. Furthermore, we show that such a retrieval approach can be generalized to unseen images.},

keywords = {HCI, mental imagery, Visual attention},

pubstate = {published},

tppubtype = {article}

}

Properties of Laplace Operators for Tetrahedral Meshes (2020)

Computer Graphics Forum

@article{Alexa:2020:PLO,

title = {Properties of Laplace Operators for Tetrahedral Meshes},

author = {Marc Alexa and Philipp Herholz and Maximilian Kohlbrenner and Olga Sorkine},

url = {https://igl.ethz.ch/projects/LB3D/, Project Page},

doi = {10.1111/cgf.14068},

year = {2020},

date = {2020-07-06},

journal = {Computer Graphics Forum},

volume = {39},

number = {5},

pages = {55-68},

abstract = {Discrete Laplacians for triangle meshes are a fundamental tool in geometry processing. The so-called cotan Laplacian is widely used since it preserves several important properties of its smooth counterpart. It can be derived from different principles: either considering the piecewise linear nature of the primal elements or associating values to the dual vertices. Both approaches lead to the same operator in the two-dimensional setting. In contrast, for tetrahedral meshes, only the primal construction is reminiscent of the cotan weights, involving dihedral angles. We provide explicit formulas for the lesser-known dual construction. In both cases, the weights can be computed by adding the contributions of individual tetrahedra to an edge. The resulting two different discrete Laplacians for tetrahedral meshes only retain some of the properties of their two-dimensional counterpart. In particular, while both constructions have linear precision, only the primal construction is positive semi-definite and only the dual construction generates positive weights and provides a maximum principle for Delaunay meshes. We perform a range of numerical experiments that highlight the benefits and limitations of the two constructions for different problems and meshes.},

keywords = {computer graphics, geometry processing, laplacian},

pubstate = {published},

tppubtype = {article}

}

2019

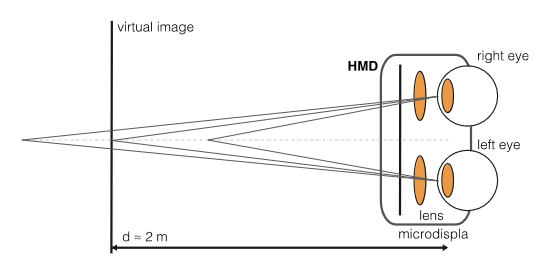

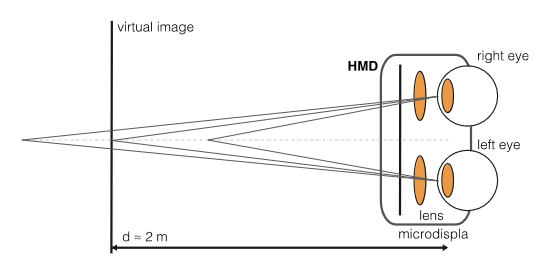

Keep It Simple: Depth-based Dynamic Adjustment of Rendering for Head-mounted Displays Decreases Visual Comfort (2019)

ACM Trans. Appl. Percept.

@article{DynamicRendering,

title = {Keep It Simple: Depth-based Dynamic Adjustment of Rendering for Head-mounted Displays Decreases Visual Comfort},

author = {Jochen Jacobs and Xi Wang and Marc Alexa},

url = {https://dl.acm.org/citation.cfm?id=3353902, Article

https://dl.acm.org/authorize?N682026, ACM Authorized Paper

},

doi = {10.1145/3353902},

year = {2019},

date = {2019-09-09},

journal = {ACM Trans. Appl. Percept.},

volume = {16},

number = {3},

pages = {16},

abstract = {Head-mounted displays cause discomfort. This is commonly attributed to conflicting depth cues, most prominently between vergence, which is consistent with object depth, and accommodation, which is adjusted to the near eye displays.

It is possible to adjust the camera parameters, specifically interocular distance and vergence angles, for rendering the virtual environment to minimize this conflict. This requires dynamic adjustment of the parameters based on object depth. In an experiment based on a visual search task, we evaluate how dynamic adjustment affects visual comfort compared to fixed camera parameters. We collect objective as well as subjective data. Results show that dynamic adjustment decreases common objective measures of visual comfort such as pupil diameter and blink rate by a statistically significant margin. The subjective evaluation of categories such as fatigue or eye irritation shows a similar trend but was inconclusive. This suggests that rendering with fixed camera parameters is the better choice for head-mounted displays, at least in scenarios similar to the ones used here.},

keywords = {dynamic rendering, vergence, Virtual reality},

pubstate = {published},

tppubtype = {article}

}

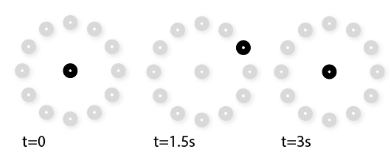

The mean point of vergence is biased under projection (2019)

Journal of Eye Movement Research

@article{jemr2019,

title = {The mean point of vergence is biased under projection},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

url = {https://bop.unibe.ch/JEMR/article/view/JEMR.12.4.2},

doi = {10.16910/jemr.12.4.2},

issn = {1995-8692},

year = {2019},

date = {2019-09-09},

journal = {Journal of Eye Movement Research},

volume = {12},

number = {4},

abstract = {The point of interest in three-dimensional space in eye tracking is often computed based on intersecting the lines of sight with geometry, or finding the point closest to the two lines of sight. We first start by theoretical analysis with synthetic simulations. We show that the mean point of vergence is generally biased for centrally symmetric errors and that the bias depends on the horizontal vs. vertical error distribution of the tracked eye positions. Our analysis continues with an evaluation on real experimental data. The error distributions seem to be different among individuals but they generally lead to the same bias towards the observer. And it tends to be larger with an increased viewing distance. We also provided a recipe to minimize the bias, which applies to general computations of eye ray intersection. These findings not only have implications for choosing the calibration method in eye tracking experiments and interpreting the observed eye movements data; but also suggest to us that we shall consider the mathematical models of calibration as part of the experiment.},

keywords = {eye tracking, vergence},

pubstate = {published},

tppubtype = {article}

}

Computational discrimination between natural images based on gaze during mental imagery (2019)

Presented at 20th European Conference on Eye Movements (ECEM)

@misc{ecem19,

title = {Computational discrimination between natural images based on gaze during mental imagery},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

url = {http://ecem2019.com/media/attachments/2019/08/27/ecem_abstract_book_updated.pdf},

year = {2019},

date = {2019-08-18},

abstract = {The term “looking-at-nothing” describes the phenomenon that humans move their eyes when looking in front of an empty space. Previous studies showed that eye movements during mental imagery while looking at nothing play a functional role in memory retrieval. However, they are not a reinstatement of the eye movements while looking at the visual stimuli and are generally distorted due to the lack of reference in front of an empty space. So far it remains unclear what the degree of similarity is between eye movements during encoding and eye movements during recall.

We studied the mental imagery eye movements while looking at nothing in a lab-controlled experiment. 100 natural images were viewed and recalled by 28 observers, following the standard looking-at-nothing paradigm. We compared the basic characteristics of eye movements during both encoding and recall. Furthermore, we studied the similarity of eye movements between two conditions by asking the question: how visual imagery eye movements can be employed for computational image retrieval. Our results showed that gaze patterns in both conditions can be used to retrieve the corresponding visual stimuli. By utilizing the similarity between gaze patterns during encoding and those during recall, we showed that it is possible to generalize to new images. This study quantitatively compared the similarity between eye movements while looking at the images and those while recalling them, and offers a solid method for future studies on the looking-at-nothing phenomenon.},

howpublished = {Presented at 20th European Conference on Eye Movements (ECEM)},

keywords = {eye tracking, image retrieval, mental imagery},

pubstate = {published},

tppubtype = {misc}

}

CurviSlicer: slightly curved slicing for 3-axis printers (2019)

- Jimmy Etienne

- Nicolas Ray

- Daniele Panozzo

- Samuel Hornus

- Charlie C. L. Wang

- Jonas Martinez

- Sara McMains

- Marc Alexa

- Brian Wyvill

- Sylvain Lefebvre

ACM Transactions on Graphics

@article{Etienne:2019:CS,

title = {CurviSlicer: slightly curved slicing for 3-axis printers},

author = {Jimmy Etienne and Nicolas Ray and Daniele Panozzo and Samuel Hornus and Charlie C. L. Wang and Jonas Martinez and Sara McMains and Marc Alexa and Brian Wyvill and Sylvain Lefebvre},

url = {https://dl.acm.org/authorize?N681473, ACM Authorized Article},

doi = {10.1145/3306346.3323022},

year = {2019},

date = {2019-07-04},

journal = {ACM Transactions on Graphics},

volume = {38},

number = {4},

abstract = {Most additive manufacturing processes fabricate objects by stacking planar layers of solidified material. As a result, produced parts exhibit a so-called staircase effect, which results from sampling slanted surfaces with parallel planes. Using thinner slices reduces this effect, but it always remains visible where layers almost align with the input surfaces.

In this research we exploit the ability of some additive manufacturing processes to deposit material slightly out of plane to dramatically reduce these artifacts. We focus in particular on the widespread Fused Filament Fabrication (FFF) technology, since most printers in this category can deposit along slightly curved paths, under deposition slope and thickness constraints.

Our algorithm curves the layers, making them either follow the natural slope of the input surface or, on the contrary, intersect the surfaces at a steeper angle, thereby improving the sampling quality. Rather than directly computing curved layers, our algorithm optimizes for a deformation of the model which is then sliced with a standard planar approach. We demonstrate that this approach enables us to encode all fabrication constraints, including the guarantee of generating collision-free toolpaths, in a convex optimization that can be solved using a QP solver.

We produce a variety of models and compare print quality between curved deposition and planar slicing.},

keywords = {Digital manufacturing},

pubstate = {published},

tppubtype = {article}

}

Harmonic Triangulations (2019)

ACM Transactions on Graphics

@article{Alexa:2019:HT,

title = {Harmonic Triangulations},

author = {Marc Alexa},

url = {https://www.cg.tu-berlin.de/harmonic-triangulations/, Project Page

https://dl.acm.org/authorize?N688246, ACM Authorized Paper},

doi = {10.1145/3306346.3322986},

year = {2019},

date = {2019-07-03},

journal = {ACM Transactions on Graphics},

volume = {38},

number = {4},

pages = {54},

abstract = {We introduce the notion of harmonic triangulations: a harmonic triangulation simultaneously minimizes the Dirichlet energy of all piecewise linear functions. By a famous result of Rippa, Delaunay triangulations are the harmonic triangulations of planar point sets. We prove by explicit counterexample that in 3D a harmonic triangulation does not exist in general. However, we show that bistellar flips are harmonic: if they decrease Dirichlet energy for one set of function values, they do so for all. This observation gives rise to the notion of locally harmonic triangulations. We demonstrate that locally harmonic triangulations can be efficiently computed, and efficiently reduce sliver tetrahedra. The notion of harmonic triangulation also gives rise to a scalar measure of the quality of a triangulation, which can be used to prioritize flips and optimize the position of vertices. Tetrahedral meshes generated by optimizing this function generally show better quality than Delaunay-based optimization techniques. },

keywords = {computer graphics geometry},

pubstate = {published},

tppubtype = {article}

}

The Mental Image Revealed by Gaze Tracking (2019)

- Xi Wang

- Andreas Ley

- Sebastian Koch

- David Lindlbauer

- James Hays

- Kenneth Holmqvist

- Marc Alexa

Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)

@conference{mentalImg,

title = {The Mental Image Revealed by Gaze Tracking},

author = {Xi Wang and Andreas Ley and Sebastian Koch and David Lindlbauer and James Hays and Kenneth Holmqvist and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/mental_imagery/retrieval.html, Project page

https://dl.acm.org/authorize?N681045, ACM Authorized Paper

},

doi = {10.1145/3290605.3300839},

isbn = {978-1-4503-5970-2},

year = {2019},

date = {2019-05-04},

booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)},

publisher = {ACM},

abstract = {Humans involuntarily move their eyes when retrieving an image from memory. This motion is often similar to actually observing the image. We suggest to exploit this behavior as a new modality in human computer interaction, using the motion of the eyes as a descriptor of the image. Interaction requires the user's eyes to be tracked but no voluntary physical activity. We perform a controlled experiment and develop matching techniques using machine learning to investigate if images can be discriminated based on the gaze patterns recorded while users merely think about an image. Our results indicate that image retrieval is possible with an accuracy significantly above chance. We also show that this result generalizes to images not used during training of the classifier and extends to uncontrolled settings in a realistic scenario.},

keywords = {eye tracking, image retrieval},

pubstate = {published},

tppubtype = {conference}

}

Understanding Metamaterial Mechanisms (2019)

- Alexandra Ion

- David Lindlbauer

- Philipp Herholz

- Marc Alexa

- Patrick Baudisch

Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)

@conference{Ion:UMM:2019,

title = {Understanding Metamaterial Mechanisms},

author = {Alexandra Ion and David Lindlbauer and Philipp Herholz and Marc Alexa and Patrick Baudisch},

url = {https://dl.acm.org/authorize?N681474, ACM Authorized Paper},

doi = {10.1145/3290605.3300877},

year = {2019},

date = {2019-05-01},

booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)},

publisher = {ACM},

abstract = {In this paper, we establish the underlying foundations of mechanisms that are composed of cell structures---known as metamaterial mechanisms. Such metamaterial mechanisms were previously shown to implement complete mechanisms in the cell structure of a 3D printed material, without the need for assembly. However, their design is highly challenging. A mechanism consists of many cells that are interconnected and impose constraints on each other. This leads to unobvious and non-linear behavior of the mechanism, which impedes user design. In this work, we investigate the underlying topological constraints of such cell structures and their influence on the resulting mechanism. Based on these findings, we contribute a computational design tool that automatically creates a metamaterial mechanism from user-defined motion paths. This tool is only feasible because our novel abstract representation of the global constraints highly reduces the search space of possible cell arrangements.},

keywords = {Digital manufacturing, fabrication},

pubstate = {published},

tppubtype = {conference}

}

ABC: A Big CAD Model Dataset for Geometric Deep Learning (2019)

- Sebastian Koch

- Albert Matveev

- Zhongshi Jiang

- Francis Williams

- Alexey Artemov

- Evgeny Burnaev

- Marc Alexa

- Denis Zorin

- Daniele Panozzo

Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition

@conference{Koch:2019:ABC,

title = {ABC: A Big CAD Model Dataset for Geometric Deep Learning},

author = {Sebastian Koch and Albert Matveev and Zhongshi Jiang and Francis Williams and Alexey Artemov and Evgeny Burnaev and Marc Alexa and Denis Zorin and Daniele Panozzo},

url = {http://openaccess.thecvf.com/content_CVPR_2019/html/Koch_ABC_A_Big_CAD_Model_Dataset_for_Geometric_Deep_Learning_CVPR_2019_paper.html, Article at CVF},

year = {2019},

date = {2019-05-01},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

pages = {9601--9611},

publisher = {IEEE},

abstract = {We introduce ABC-Dataset, a collection of one million Computer-Aided Design (CAD) models for research of geometric deep learning methods and applications. Each model is a collection of explicitly parametrized curves and surfaces, providing ground truth for differential quantities, patch segmentation, geometric feature detection, and shape reconstruction. Sampling the parametric descriptions of surfaces and curves allows generating data in different formats and resolutions, enabling fair comparisons for a wide range of geometric learning algorithms. As a use case for our dataset, we perform a large-scale benchmark for estimation of surface normals, comparing existing data driven methods and evaluating their performance against both the ground truth and traditional normal estimation methods.},

keywords = {Digital manufacturing, geometry processing, polygonal meshes, shape retrieval},

pubstate = {published},

tppubtype = {conference}

}

Efficient Computation of Smoothed Exponential Maps (2019)

Computer Graphics Forum

@article{Herholz:2019:CGF,

title = {Efficient Computation of Smoothed Exponential Maps},

author = {Philipp Herholz and Marc Alexa},

doi = {10.1111/cgf.13607},

year = {2019},

date = {2019-03-14},

journal = {Computer Graphics Forum},

volume = {38},

pages = {79--90},

abstract = {Many applications in geometry processing require the computation of local parameterizations on a surface mesh at interactive rates. A popular approach is to compute local exponential maps, i.e. parameterizations that preserve distance and angle to the origin of the map. We extend the computation of geodesic distance by heat diffusion to also determine angular information for the geodesic curves. This approach has two important benefits compared to fast approximate as well as exact forward tracing of the distance function: First, it allows generating smoother maps, avoiding discontinuities. Second, exploiting the factorization of the global Laplace–Beltrami operator of the mesh and using recent localized solution techniques, the computation is more efficient even compared to fast approximate solutions based on Dijkstra's algorithm.},

keywords = {geometry processing},

pubstate = {published},

tppubtype = {article}

}

Center of circle after perspective transformation (2019)

arXiv preprint arXiv:1902.04541

@online{concentricCircles,

title = {Center of circle after perspective transformation},

author = {Xi Wang and Albert Chern and Marc Alexa},

url = {https://arxiv.org/abs/1902.04541},

year = {2019},

date = {2019-02-12},

organization = {arXiv preprint arXiv:1902.04541 },

abstract = {Video-based glint-free eye tracking commonly estimates gaze direction based on the pupil center. The boundary of the pupil is fitted with an ellipse and the Euclidean center of the ellipse in the image is taken as the center of the pupil. However, the center of the pupil is generally not mapped to the center of the ellipse by the projective camera transformation. This error resulting from using a point that is not the true center of the pupil directly affects eye tracking accuracy. We investigate the underlying geometric problem of determining the center of a circular object based on its projective image. The main idea is to exploit two concentric circles -- in the application scenario these are the pupil and the iris. We show that it is possible to compute the center and the ratio of the radii from the mapped concentric circles with a direct method that is fast and robust in practice. We evaluate our method on synthetically generated data and find that it improves systematically over using the center of the fitted ellipse. Apart from applications of eye tracking we estimate that our approach will be useful in other tracking applications.},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {online}

}

2018

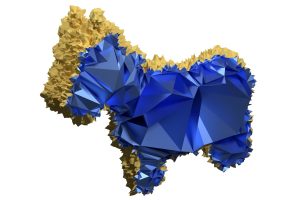

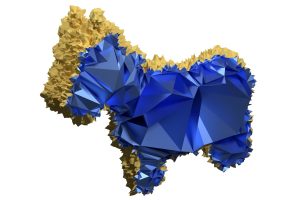

Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny? (2018)

ACM Transactions on Graphics (Proc. of Siggraph Asia)

@article{Gaze3D,

title = {Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny?},

author = {Xi Wang and Sebastian Koch and Kenneth Holmqvist and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/project_saliency/3D_dataset.html, Project page

https://dl.acm.org/authorize?N688247, ACM Authorized Paper},

doi = {10.1145/3272127.3275094},

year = {2018},

date = {2018-12-03},

booktitle = {ACM Transactions on Graphics (Proc. of Siggraph Asia)},

journal = {ACM Transactions on Graphics},

volume = {37},

number = {6},

publisher = {ACM},

abstract = {We provide the first large dataset of human fixations on physical 3D objects presented in varying viewing conditions and made of different materials. Our experimental setup is carefully designed to allow for accurate calibration and measurement. We estimate a mapping from the pair of pupil positions to 3D coordinates in space and register the presented shape with the eye tracking setup. By modeling the fixated positions on 3D shapes as a probability distribution, we analyze the similarities among different conditions. The resulting data indicates that salient features depend on the viewing direction. Stable features across different viewing directions seem to be connected to semantically meaningful parts. We also show that it is possible to estimate the gaze density maps from view dependent data. The dataset provides the necessary ground truth data for computational models of human perception in 3D.},

keywords = {eye tracking, Visual Saliency},

pubstate = {published},

tppubtype = {article}

}

Factor Once: Reusing Cholesky Factorizations on Sub-Meshes (2018)

ACM Transactions on Graphics (Proc. of Siggraph Asia)

@article{Herholz:2018,

title = {Factor Once: Reusing Cholesky Factorizations on Sub-Meshes},

author = {Philipp Herholz and Marc Alexa},

url = {https://dl.acm.org/authorize?N688248, ACM Authorized Paper},

doi = {10.1145/3272127.3275107},

year = {2018},

date = {2018-12-01},

booktitle = {ACM Transactions on Graphics (Proc. of Siggraph Asia)},

journal = {ACM Transactions on Graphics},

volume = {37},

number = {6},

publisher = {ACM},

abstract = {A common operation in geometry processing is solving symmetric and positive semi-definite systems on a subset of a mesh with conditions for the vertices at the boundary of the region. This is commonly done by setting up the linear system for the sub-mesh, factorizing the system (potentially applying preordering to improve sparseness of the factors), and then solving by back-substitution. This approach suffers from a comparably high setup cost for each local operation. We propose to reuse factorizations defined on the full mesh to solve linear problems on sub-meshes. We show how an update on sparse matrices can be performed in a particularly efficient way to obtain the factorization of the operator on a sub-mesh significantly outperforming general factor updates and complete refactorization. We analyze the resulting speedup for a variety of situations and demonstrate that our method outperforms factorization of a new matrix by a factor of up to 10 while never being slower in our experiments.},

keywords = {geometry processing, linear solvers},

pubstate = {published},

tppubtype = {article}

}

Maps of Visual Importance: What is recalled from visual episodic memory? (2018)

Presented at 41st European Conference on Visual Perception (ECVP)

@misc{Eyetrackingb,

title = {Maps of Visual Importance: What is recalled from visual episodic memory?},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

year = {2018},

date = {2018-08-26},

abstract = {It has been shown that not all fixated locations in a scene are encoded in visual memory. We propose a new way to probe experimentally whether the scene content corresponding to a fixation was considered important by the observer. Our protocol is based on findings from mental imagery showing that fixation locations are reenacted during recall. We track observers' eye movements during stimulus presentation and subsequently, observers are asked to recall the visual content while looking at a neutral background. The tracked gaze locations from the two conditions are aligned using a novel elastic matching algorithm. Motivated by the hypothesis that visual content is recalled only if it has been encoded, we filter fixations from the presentation phase based on fixation locations from recall. The resulting density maps encode fixated scene elements that observers remembered, indicating importance of scene elements. We find that these maps contain top-down rather than bottom-up features.},

howpublished = {Presented at 41st European Conference on Visual Perception (ECVP)},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {misc}

}

Design and analysis of directional front projection screens (2018)

- Michal Piovarci

- Michael Wessely

- Michal Jagielski

- Marc Alexa

- Wojciech Matusik

- Piotr Didyk

Computers & Graphics

@article{Piovarci:2018:DAD,

title = {Design and analysis of directional front projection screens},

author = {Michal Piovarci and Michael Wessely and Michal Jagielski and Marc Alexa and Wojciech Matusik and Piotr Didyk},

doi = {10.1016/j.cag.2018.05.010},

issn = {0097-8493},

year = {2018},

date = {2018-08-01},

journal = {Computers & Graphics},

volume = {74},

pages = {213-224},

keywords = {computer graphics, fabrication},

pubstate = {published},

tppubtype = {article}

}

Remixed Reality: Manipulating Space and Time in Augmented Reality (2018)

- David Lindlbauer

- Andy D. Wilson

CHI 2018

@inproceedings{Lindlbauer2018,

title = {Remixed Reality: Manipulating Space and Time in Augmented Reality},

author = {David Lindlbauer and Andy D. Wilson},

url = {https://dl.acm.org/authorize?N653481, Paper

https://www.youtube.com/watch?v=BjhaZi1l-hY, Preview video

https://www.youtube.com/watch?v=GoSQTPfrdCc, Video},

doi = {10.1145/3173574.3173703},

isbn = {978-1-4503-5620-6},

year = {2018},

date = {2018-04-22},

booktitle = {CHI 2018},

publisher = {ACM},

series = {CHI'18},

abstract = {We present Remixed Reality, a novel form of mixed reality. In contrast to classical mixed reality approaches where users see a direct view or video feed of their environment, with Remixed Reality they see a live 3D reconstruction, gathered from multiple external depth cameras. This approach enables changing the environment as easily as geometry can be changed in virtual reality, while allowing users to view and interact with the actual physical world as they would in augmented reality. We characterize a taxonomy of manipulations that are possible with Remixed Reality: spatial changes such as erasing objects; appearance changes such as changing textures; temporal changes such as pausing time; and viewpoint changes that allow users to see the world from different points without changing their physical location. We contribute a method that uses an underlying voxel grid holding information like visibility and transformations, which is applied to live geometry in real time.},

keywords = {augmented reality, HCI, mixed reality},

pubstate = {published},

tppubtype = {inproceedings}

}

2017

Maps of Visual Importance (2017)

arXiv preprint arXiv:1712.02142

@online{Visualimportance,

title = {Maps of Visual Importance},

author = {Xi Wang and Marc Alexa},

url = {https://arxiv.org/abs/1712.02142},

year = {2017},

date = {2017-12-06},

organization = {arXiv preprint arXiv:1712.02142},

abstract = {The importance of an element in a visual stimulus is commonly associated with the fixations during a free-viewing task. We argue that fixations are not always correlated with attention or awareness of visual objects. We suggest filtering the fixations recorded during exploration of the image based on the fixations recorded during recall of the image against a neutral background. This idea exploits the fact that eye movements are a spatial index into the memory of a visual stimulus. We perform an experiment in which we record the eye movements of 30 observers during the presentation and recollection of 100 images. The locations of fixations during recall are only qualitatively related to the fixations during exploration. We develop a deformation mapping technique to align the fixations from recall with the fixations during exploration. This allows filtering the fixations based on proximity, and a threshold on proximity provides a convenient slider to control the amount of filtering. Analyzing the spatial histograms resulting from the filtering procedure as well as the set of removed fixations shows that certain types of scene elements, which could be considered irrelevant, are removed. In this sense, they provide a measure of importance of visual elements for human observers.},

keywords = {eye tracking, mental imagery, Visual Saliency},

pubstate = {published},

tppubtype = {online}

}

Localized solutions of sparse linear systems for geometry processing (2017)

ACM Transactions on Graphics (TOG)

@article{Herholz:2017,

title = {Localized solutions of sparse linear systems for geometry processing},

author = {Philipp Herholz and Timothy A. Davis and Marc Alexa},

url = {https://dl.acm.org/authorize?N668657, ACM Authorized Paper},

doi = {10.1145/3130800.3130849},

year = {2017},

date = {2017-11-06},

booktitle = {ACM Transactions on Graphics (TOG)},

journal = {ACM Transactions on Graphics},

volume = {36},

number = {6},

publisher = {ACM},

abstract = {Computing solutions to linear systems is a fundamental building block of many geometry processing algorithms. In many cases the Cholesky factorization of the system matrix is computed to subsequently solve the system, possibly for many right-hand sides, using forward and back substitution. We demonstrate how to exploit sparsity in both the right-hand side and the set of desired solution values to obtain significant speedups. The method is easy to implement and potentially useful in any scenario where linear problems have to be solved locally. We show that this technique is useful for geometry processing operations; in particular, we consider the solution of diffusion problems. All problems profit significantly from sparse computations in terms of runtime, which we demonstrate by providing timings for a set of numerical experiments.},

keywords = {geometry processing, linear solvers},

pubstate = {published},

tppubtype = {article}

}

HeatSpace: Automatic Placement of Displays by Empirical Analysis of User Behavior (2017)

- Andreas Fender

- David Lindlbauer

- Philipp Herholz

- Marc Alexa

- Jörg Müller

ACM Symposium on User Interface Software and Technology, UIST'17

@inproceedings{Fender2017,

title = {HeatSpace: Automatic Placement of Displays by Empirical Analysis of User Behavior},

author = {Andreas Fender and David Lindlbauer and Philipp Herholz and Marc Alexa and Jörg Müller},

url = {http://dl.acm.org/authorize?N40687, Paper

https://www.youtube.com/watch?v=9IQFY_fNz_w, Preview video

https://www.youtube.com/watch?v=pSZHUseWtj4, Video},

doi = {10.1145/3126594.3126621},

isbn = {978-1-4503-4981-9},

year = {2017},

date = {2017-10-25},

booktitle = {ACM Symposium on User Interface Software and Technology, UIST'17},

pages = {611-621},

publisher = {ACM},

series = {UIST'17},

abstract = {We present HeatSpace, a system that records and empirically analyzes user behavior in a space and automatically suggests positions and sizes for new displays. The system uses depth cameras to capture 3D geometry and users' perspectives over time. To derive possible display placements, it calculates volumetric heatmaps describing geometric persistence and planarity of structures inside the space. It evaluates visibility of display poses by calculating a volumetric heatmap describing occlusions, position within users' field of view, and viewing angle. Optimal display size is calculated through a heatmap of average viewing distance. Based on the heatmaps and user constraints we sample the space of valid display placements and jointly optimize their positions. This can be useful when installing displays in multi-display environments such as meeting rooms, offices, and train stations.},

keywords = {display placement, HCI},

pubstate = {published},

tppubtype = {inproceedings}

}

Optically Dynamic Interfaces (2017)

Adjunct Publication of the 30th Annual ACM Symposium on User Interface Software and Technology, UIST'17 Adjunct

@inproceedings{Lindlbauer17ODI,

title = {Optically Dynamic Interfaces},

author = {David Lindlbauer},

url = {http://dl.acm.org/authorize?N40688, Paper},

doi = {10.1145/3131785.3131840},

isbn = {978-1-4503-5419-6},

year = {2017},

date = {2017-10-22},

booktitle = {Adjunct Publication of the 30th Annual ACM Symposium on User Interface Software and Technology, UIST'17 Adjunct},

pages = {107-110},

publisher = {ACM},

series = {UIST'17 Adjunct},

abstract = {In the virtual world, changing properties of objects such as their color, size or shape is one of the main means of communication. Objects are hidden or revealed when needed, or undergo changes in color or size to communicate importance. I am interested in how these features can be brought into the real world by modifying the optical properties of physical objects and devices, and how this dynamic appearance influences interaction and behavior. The interplay of creating functional prototypes of interactive artifacts and devices, and studying them in controlled experiments forms the basis of my research. During my research I created a three-level model describing how physical artifacts and interfaces can be appropriated to allow for dynamic appearance: (1) dynamic objects, (2) augmented objects, and (3) augmented surroundings. This position paper outlines these three levels and details instantiations of each level that were created in the context of this thesis research.},

keywords = {augmented reality, augmented surroundings, dynamic appearance, HCI, optically dynamic interfaces, shape-changing interfaces, transparent displays},

pubstate = {published},

tppubtype = {inproceedings}

}

3D Eye Tracking in Monocular and Binocular Conditions (2017)

Presented at 19th European Conference on Eye Movements (ECEM)

@misc{Eyetracking,

title = {3D Eye Tracking in Monocular and Binocular Conditions},

author = {Xi Wang and Marianne Maertens and Marc Alexa},

url = {https://social.hse.ru/data/2017/10/26/1157724079/ECEM_Booklet.pdf},

year = {2017},

date = {2017-08-20},

abstract = {Results of eye tracking experiments on vergence are contradictory: for example, the point of vergence has been found in front of as well as behind the target location. The point of vergence is computed by intersecting two lines associated with pupil positions. This approach requires that a fixed eye position corresponds to a straight line of targets in space. However, as long as the targets in an experiment are distributed on a surface (e.g. a monitor), the straight-line assumption cannot be validated; inconsistencies would be hidden in the model estimated during the calibration procedure. We have developed an experimental setup for 3D eye tracking based on fiducial markers, whose positions are estimated using computer vision techniques. This allows us to map points in 3D space to pupil positions and, thus, test the straight-line hypothesis. In the experiment, we test both monocular and binocular viewing conditions. Preliminary results suggest that a) the monocular condition is consistent with the straight-line hypothesis and b) binocular viewing shows disparity under the monocular straight-line model. This implies that binocular calibration is unsuitable for experiments about vergence. Further analysis is aimed at developing a consistent model of binocular viewing.},

howpublished = {Presented at 19th European Conference on Eye Movements (ECEM)},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {misc}

}

Directional Screens (2017)

- Michal Piovarci

- Michael Wessely

- Michal Jagielski

- Marc Alexa

- Wojciech Matusik

- Piotr Didyk

Proceedings of the 1st Annual ACM Symposium on Computational Fabrication

@inproceedings{Piovarci:2017:DS,

title = {Directional Screens},

author = {Michal Piovarci and Michael Wessely and Michal Jagielski and Marc Alexa and Wojciech Matusik and Piotr Didyk},

url = {https://dl.acm.org/authorize?N655725, Paper (ACM Authorized)},

doi = {10.1145/3083157.3083162},

isbn = {978-1-4503-4999-4},

year = {2017},

date = {2017-06-12},

booktitle = {Proceedings of the 1st Annual ACM Symposium on Computational Fabrication},

pages = {1:1--1:10},

publisher = {ACM},

address = {New York, NY, USA},