Publications

Computational discrimination between natural images based on gaze during mental imagery (2020)

Scientific Reports

@article{Wang:2020:CD,

title = {Computational discrimination between natural images based on gaze during mental imagery},

author = {Xi Wang and Andreas Ley and Sebastian Koch and James Hays and Kenneth Holmqvist and Marc Alexa},

url = {https://rdcu.be/b6tel, Article

http://cybertron.cg.tu-berlin.de/xiwang/mental_imagery/retrieval.html, Related Project},

doi = {10.1038/s41598-020-69807-0},

issn = {2045-2322},

year = {2020},

date = {2020-08-03},

journal = {Scientific Reports},

volume = {10},

pages = {13035},

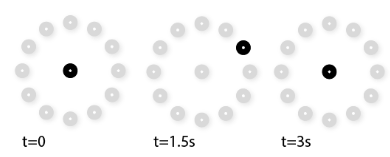

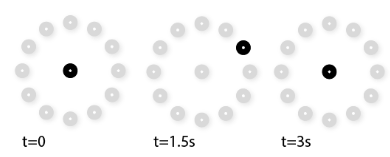

abstract = {When retrieving an image from memory, humans usually move their eyes spontaneously as if the image were in front of them. Such eye movements correlate strongly with the spatial layout of the recalled image content and function as memory cues facilitating the retrieval procedure. However, how close the correlation is between imagery eye movements and the eye movements while looking at the original image is unclear so far. In this work we first quantify the similarity of eye movements between recalling an image and encoding the same image, followed by the investigation on whether comparing such pairs of eye movements can be used for computational image retrieval. Our results show that computational image retrieval based on eye movements during spontaneous imagery is feasible. Furthermore, we show that such a retrieval approach can be generalized to unseen images.},

keywords = {HCI, mental imagery, Visual attention},

pubstate = {published},

tppubtype = {article}

}

Keep It Simple: Depth-based Dynamic Adjustment of Rendering for Head-mounted Displays Decreases Visual Comfort (2019)

ACM Trans. Appl. Percept.

@article{DynamicRendering,

title = {Keep It Simple: Depth-based Dynamic Adjustment of Rendering for Head-mounted Displays Decreases Visual Comfort},

author = {Jochen Jacobs and Xi Wang and Marc Alexa},

url = {https://dl.acm.org/citation.cfm?id=3353902, Article

https://dl.acm.org/authorize?N682026, ACM Authorized Paper

},

doi = {10.1145/3353902},

year = {2019},

date = {2019-09-09},

journal = {ACM Trans. Appl. Percept. },

volume = {16},

number = {3},

pages = {16},

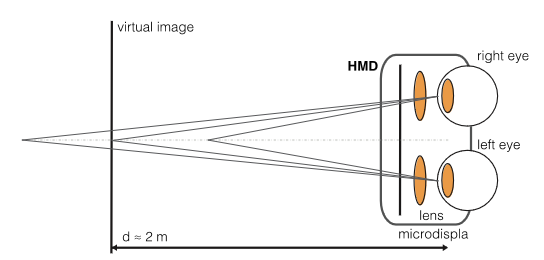

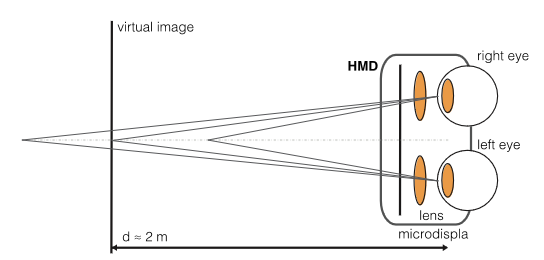

abstract = {Head-mounted displays cause discomfort. This is commonly attributed to conflicting depth cues, most prominently between vergence, which is consistent with object depth, and accommodation, which is adjusted to the near eye displays.

It is possible to adjust the camera parameters, specifically interocular distance and vergence angles, for rendering the virtual environment to minimize this conflict. This requires dynamic adjustment of the parameters based on object depth. In an experiment based on a visual search task, we evaluate how dynamic adjustment affects visual comfort compared to fixed camera parameters. We collect objective as well as subjective data. Results show that dynamic adjustment decreases common objective measures of visual comfort such as pupil diameter and blink rate by a statistically significant margin. The subjective evaluation of categories such as fatigue or eye irritation shows a similar trend but was inconclusive. This suggests that rendering with fixed camera parameters is the better choice for head-mounted displays, at least in scenarios similar to the ones used here.},

keywords = {dynamic rendering, vergence, Virtual reality},

pubstate = {published},

tppubtype = {article}

}

The mean point of vergence is biased under projection (2019)

Journal of Eye Movement Research

@article{jemr2019,

title = {The mean point of vergence is biased under projection},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

url = {https://bop.unibe.ch/JEMR/article/view/JEMR.12.4.2},

doi = {10.16910/jemr.12.4.2},

issn = {1995-8692},

year = {2019},

date = {2019-09-09},

journal = {Journal of Eye Movement Research},

volume = {12},

number = {4},

abstract = {The point of interest in three-dimensional space in eye tracking is often computed based on intersecting the lines of sight with geometry, or finding the point closest to the two lines of sight. We start with a theoretical analysis using synthetic simulations. We show that the mean point of vergence is generally biased for centrally symmetric errors and that the bias depends on the horizontal vs. vertical error distribution of the tracked eye positions. Our analysis continues with an evaluation on real experimental data. The error distributions seem to differ among individuals, but they generally lead to the same bias towards the observer, and the bias tends to be larger with increased viewing distance. We also provide a recipe to minimize the bias, which applies to general computations of eye ray intersection. These findings not only have implications for choosing the calibration method in eye tracking experiments and interpreting the observed eye movement data, but also suggest that we should consider the mathematical models of calibration as part of the experiment.},

keywords = {eye tracking, vergence},

pubstate = {published},

tppubtype = {article}

}

Computational discrimination between natural images based on gaze during mental imagery (2019)

Presented at 20th European Conference on Eye Movements (ECEM)

@misc{ecem19,

title = {Computational discrimination between natural images based on gaze during mental imagery},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

url = {http://ecem2019.com/media/attachments/2019/08/27/ecem_abstract_book_updated.pdf},

year = {2019},

date = {2019-08-18},

abstract = {The term “looking-at-nothing” describes the phenomenon that humans move their eyes when looking at an empty space. Previous studies showed that eye movements during mental imagery while looking at nothing play a functional role in memory retrieval. However, they are not a reinstatement of the eye movements while looking at the visual stimuli and are generally distorted due to the lack of reference in front of an empty space. So far it remains unclear what the degree of similarity is between eye movements during encoding and eye movements during recall.

We studied the mental imagery eye movements while looking at nothing in a lab-controlled experiment. 100 natural images were viewed and recalled by 28 observers, following the standard looking-at-nothing paradigm. We compared the basic characteristics of eye movements during both encoding and recall. Furthermore, we studied the similarity of eye movements between the two conditions by asking how visual imagery eye movements can be employed for computational image retrieval. Our results showed that gaze patterns in both conditions can be used to retrieve the corresponding visual stimuli. By utilizing the similarity between gaze patterns during encoding and those during recall, we showed that it is possible to generalize to new images. This study quantitatively compared the similarity between eye movements while looking at the images and those while recalling them, and offers a solid method for future studies on the looking-at-nothing phenomenon.},

howpublished = {Presented at 20th European Conference on Eye Movements (ECEM)},

keywords = {eye tracking, image retrieval, mental imagery},

pubstate = {published},

tppubtype = {misc}

}

The Mental Image Revealed by Gaze Tracking (2019)

- Xi Wang

- Andreas Ley

- Sebastian Koch

- David Lindlbauer

- James Hays

- Kenneth Holmqvist

- Marc Alexa

Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)

@conference{mentalImg,

title = {The Mental Image Revealed by Gaze Tracking},

author = {Xi Wang and Andreas Ley and Sebastian Koch and David Lindlbauer and James Hays and Kenneth Holmqvist and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/mental_imagery/retrieval.html, Project page

https://dl.acm.org/authorize?N681045, ACM Authorized Paper

},

doi = {10.1145/3290605.3300839},

isbn = {978-1-4503-5970-2},

year = {2019},

date = {2019-05-04},

booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19)},

publisher = {ACM},

abstract = {Humans involuntarily move their eyes when retrieving an image from memory. This motion is often similar to actually observing the image. We suggest exploiting this behavior as a new modality in human computer interaction, using the motion of the eyes as a descriptor of the image. Interaction requires the user's eyes to be tracked but no voluntary physical activity. We perform a controlled experiment and develop matching techniques using machine learning to investigate if images can be discriminated based on the gaze patterns recorded while users merely think about an image. Our results indicate that image retrieval is possible with an accuracy significantly above chance. We also show that this result generalizes to images not used during training of the classifier and extends to uncontrolled settings in a realistic scenario.},

keywords = {eye tracking, image retrieval},

pubstate = {published},

tppubtype = {conference}

}

Center of circle after perspective transformation (2019)

arXiv preprint arXiv:1902.04541

@online{concentricCircles,

title = {Center of circle after perspective transformation},

author = {Xi Wang and Albert Chern and Marc Alexa},

url = {https://arxiv.org/abs/1902.04541},

year = {2019},

date = {2019-02-12},

organization = {arXiv preprint arXiv:1902.04541 },

abstract = {Video-based glint-free eye tracking commonly estimates gaze direction based on the pupil center. The boundary of the pupil is fitted with an ellipse and the Euclidean center of the ellipse in the image is taken as the center of the pupil. However, the center of the pupil is generally not mapped to the center of the ellipse by the projective camera transformation. This error resulting from using a point that is not the true center of the pupil directly affects eye tracking accuracy. We investigate the underlying geometric problem of determining the center of a circular object based on its projective image. The main idea is to exploit two concentric circles -- in the application scenario these are the pupil and the iris. We show that it is possible to compute the center and the ratio of the radii from the mapped concentric circles with a direct method that is fast and robust in practice. We evaluate our method on synthetically generated data and find that it improves systematically over using the center of the fitted ellipse. Apart from applications of eye tracking we estimate that our approach will be useful in other tracking applications.},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {online}

}

Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny? (2018)

ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)

@article{Gaze3D,

title = {Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny?},

author = {Xi Wang and Sebastian Koch and Kenneth Holmqvist and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/project_saliency/3D_dataset.html, Project page

https://dl.acm.org/authorize?N688247, ACM Authorized Paper},

doi = {10.1145/3272127.3275094},

year = {2018},

date = {2018-12-03},

booktitle = {ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)},

journal = {ACM Transactions on Graphics},

volume = {37},

number = {6},

publisher = {ACM},

abstract = {We provide the first large dataset of human fixations on physical 3D objects presented in varying viewing conditions and made of different materials. Our experimental setup is carefully designed to allow for accurate calibration and measurement. We estimate a mapping from the pair of pupil positions to 3D coordinates in space and register the presented shape with the eye tracking setup. By modeling the fixated positions on 3D shapes as a probability distribution, we analyze the similarities among different conditions. The resulting data indicates that salient features depend on the viewing direction. Stable features across different viewing directions seem to be connected to semantically meaningful parts. We also show that it is possible to estimate the gaze density maps from view dependent data. The dataset provides the necessary ground truth data for computational models of human perception in 3D.},

keywords = {eye tracking, Visual Saliency},

pubstate = {published},

tppubtype = {article}

}

Maps of Visual Importance: What is recalled from visual episodic memory? (2018)

Presented at 41st European Conference on Visual Perception (ECVP)

@misc{Eyetrackingb,

title = {Maps of Visual Importance: What is recalled from visual episodic memory?},

author = {Xi Wang and Kenneth Holmqvist and Marc Alexa},

year = {2018},

date = {2018-08-26},

abstract = {It has been shown that not all fixated locations in a scene are encoded in visual memory. We propose a new way to probe experimentally whether the scene content corresponding to a fixation was considered important by the observer. Our protocol is based on findings from mental imagery showing that fixation locations are reenacted during recall. We track observers' eye movements during stimulus presentation and subsequently, observers are asked to recall the visual content while looking at a neutral background. The tracked gaze locations from the two conditions are aligned using a novel elastic matching algorithm. Motivated by the hypothesis that visual content is recalled only if it has been encoded, we filter fixations from the presentation phase based on fixation locations from recall. The resulting density maps encode fixated scene elements that observers remembered, indicating importance of scene elements. We find that these maps contain top-down rather than bottom-up features.},

howpublished = {Presented at 41st European Conference on Visual Perception (ECVP)},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {misc}

}

Maps of Visual Importance (2017)

arXiv preprint arXiv:1712.02142

@online{Visualimportance,

title = {Maps of Visual Importance},

author = {Xi Wang and Marc Alexa},

url = {https://arxiv.org/abs/1712.02142},

year = {2017},

date = {2017-12-06},

organization = {arXiv preprint arXiv:1712.02142},

abstract = {The importance of an element in a visual stimulus is commonly associated with the fixations during a free-viewing task. We argue that fixations are not always correlated with attention or awareness of visual objects. We suggest filtering the fixations recorded during exploration of the image based on the fixations recorded during recalling the image against a neutral background. This idea exploits that eye movements are a spatial index into the memory of a visual stimulus. We perform an experiment in which we record the eye movements of 30 observers during the presentation and recollection of 100 images. The locations of fixations during recall are only qualitatively related to the fixations during exploration. We develop a deformation mapping technique to align the fixations from recall with the fixations during exploration. This allows filtering the fixations based on proximity, and a threshold on proximity provides a convenient slider to control the amount of filtering. Analyzing the spatial histograms resulting from the filtering procedure as well as the set of removed fixations shows that certain types of scene elements, which could be considered irrelevant, are removed. In this sense, they provide a measure of importance of visual elements for human observers.},

keywords = {eye tracking, mental imagery, Visual Saliency},

pubstate = {published},

tppubtype = {online}

}

3D Eye Tracking in Monocular and Binocular Conditions (2017)

Presented at 19th European Conference on Eye Movements (ECEM)

@misc{Eyetracking,

title = {3D Eye Tracking in Monocular and Binocular Conditions},

author = {Xi Wang and Marianne Maertens and Marc Alexa},

url = {https://social.hse.ru/data/2017/10/26/1157724079/ECEM_Booklet.pdf},

year = {2017},

date = {2017-08-20},

abstract = {Results of eye tracking experiments on vergence are contradictory: for example, the point of vergence has been found in front of as well as behind the target location. The point of vergence is computed by intersecting two lines associated to pupil positions. This approach requires that a fixed eye position corresponds to a straight line of targets in space. However, as long as the targets in an experiment are distributed on a surface (e.g. a monitor), the straight-line assumption cannot be validated; inconsistencies would be hidden in the model estimated during calibration procedure. We have developed an experimental setup for 3D eye tracking based on fiducial markers, whose positions are estimated using computer vision techniques. This allows us to map points in 3D space to pupil positions and, thus, test the straight-line hypothesis. In the experiment, we test both monocular and binocular viewing conditions. Preliminary results suggest that a) the monocular condition is consistent with the straight-line hypothesis and b) binocular viewing shows disparity under the monocular straight line model. This implies that binocular calibration is unsuitable for experiments about vergence. Further analysis is developing a consistent model of binocular viewing.},

howpublished = {Presented at 19th European Conference on Eye Movements (ECEM)},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {misc}

}

Accuracy of Monocular Gaze Tracking on 3D Geometry (2017)

Eye Tracking and Visualization

@inbook{Wang2017,

title = {Accuracy of Monocular Gaze Tracking on 3D Geometry},

author = {Xi Wang and David Lindlbauer and Christian Lessig and Marc Alexa},

editor = {Michael Burch and Lewis Chuang and Brian Fisher and Albrecht Schmidt and Daniel Weiskopf},

url = {http://www.springer.com/de/book/9783319470238},

isbn = {978-3-319-47023-8},

year = {2017},

date = {2017-01-29},

booktitle = {Eye Tracking and Visualization},

publisher = {Springer},

chapter = {10},

abstract = {Many applications such as data visualization or object recognition benefit from accurate knowledge of where a person is looking. We present a system for accurately tracking gaze positions on a three dimensional object using a monocular head mounted eye tracker. We accomplish this by (1) using digital manufacturing to create stimuli whose geometry is known to high accuracy, (2) embedding fiducial markers into the manufactured objects to reliably estimate the rigid transformation of the object, and, (3) using a perspective model to relate pupil positions to 3D locations. This combination enables the efficient and accurate computation of gaze position on an object from measured pupil positions. We validate the accuracy of our system experimentally, achieving an angular resolution of 0.8 degree and a 1.5 % depth error using a simple calibration procedure with 11 points.},

keywords = {eye tracking},

pubstate = {published},

tppubtype = {inbook}

}

Measuring Visual Salience of 3D Printed Objects (2016)

IEEE Computer Graphics and Applications Special Issue on Quality Assessment and Perception in Computer Graphics

@article{Wang2016,

title = {Measuring Visual Salience of 3D Printed Objects},

author = {Xi Wang and David Lindlbauer and Christian Lessig and Marianne Maertens and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/project_saliency/index.html, Project page

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?arnumber=7478427&newsearch=true&queryText=Measuring%20Visual%20Salience%20of%203D%20Printed%20Objects, PDF

http://cybertron.cg.tu-berlin.de/xiwang/visual_salience_video.mp4, Video},

doi = {10.1109/MCG.2016.47},

issn = {0272-1716},

year = {2016},

date = {2016-05-25},

journal = {IEEE Computer Graphics and Applications Special Issue on Quality Assessment and Perception in Computer Graphics },

abstract = {We investigate human viewing behavior on physical realizations of 3D objects. Using an eye tracker with scene camera and fiducial markers we are able to gather fixations on the surface of the presented stimuli. This data is used to validate assumptions regarding visual saliency so far only experimentally analyzed using flat stimuli. We provide a way to compare fixation sequences from different subjects as well as a model for generating test sequences of fixations unrelated to the stimuli. This way we can show that human observers agree in their fixations for the same object under similar viewing conditions – as expected based on similar results for flat stimuli. We also develop a simple procedure to validate computational models for visual saliency of 3D objects and use it to show that popular models of mesh salience based on the center surround patterns fail to predict fixations.},

keywords = {3D Printing, eye tracking, Mesh Saliency, Visual Saliency},

pubstate = {published},

tppubtype = {article}

}

Accuracy of Monocular Gaze Tracking on 3D Geometry (2015)

Workshop on Eye Tracking and Visualization (ETVIS) co-located with IEEE VIS

@incollection{Wang2015,

title = {Accuracy of Monocular Gaze Tracking on 3D Geometry},

author = {Xi Wang and David Lindlbauer and Christian Lessig and Marc Alexa},

url = {http://cybertron.cg.tu-berlin.de/xiwang/project_saliency/index.html, Project page

http://www.vis.uni-stuttgart.de/etvis/ETVIS_2015.html, PDF},

year = {2015},

date = {2015-10-25},

booktitle = {Workshop on Eye Tracking and Visualization (ETVIS) co-located with IEEE VIS},

abstract = {Many applications in visualization benefit from accurate knowledge of where a person is looking. We present a system for accurately tracking gaze positions on a three dimensional object using a monocular head mounted eye tracker. We accomplish this by 1) using digital manufacturing to create stimuli with accurately known geometry, 2) embedding fiducial markers directly into the manufactured objects to reliably estimate the rigid transformation of the object, and, 3) using a perspective model to relate pupil positions to 3D locations. This combination enables the efficient and accurate computation of gaze position on an object from measured pupil positions. We validate the accuracy of our system experimentally, achieving an angular resolution of 0.8° and a 1.5% depth error using a simple calibration procedure with 11 points.},

keywords = {accuracy, calibration, eye tracking},

pubstate = {published},

tppubtype = {incollection}

}
